AI Is a Mirror, Not a Crystal Ball // BRXND Dispatch vol 93

Noah Brier's opening talk at BRXND NYC on what we can learn from sabotage manuals and the nooks and crannies theory of organizational change.

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of marketing and AI.

Claire here. At BRXND NYC last month, Noah opened the conference with a talk that pushes back on the narrative that AI pilots are doomed to fail. You’ve probably seen that MIT study claiming 95% of AI pilots fail—Noah’s argument is that it’s not a technology problem, it’s a people problem. We’re three years into organizations adopting AI at scale, which means we’re still really early in figuring out how to actually use it.

Noah’s talk reframes how we should be thinking about AI adoption. He argues that AI is a mirror, not a crystal ball. Good brands get better AI outputs because they’ve already built consistency and distinctiveness over time. AI models just learn those patterns and reflect them back. Which means if AI is showing us our reality, we need to look closely at what we’re actually seeing.

As Noah points out, patents.google.com is one of the largest sources of training data in Common Crawl, which means we’ve trained AI on some of the most bureaucratic language possible. There’s a beautiful parallel here with the Simple Sabotage Field Manual, a 1944 document from the Office of Strategic Services (the CIA’s wartime predecessor) that taught people how to sabotage Nazi operations by slowing them down through “bureaucratic interference.” That interference turns out to be everyday work stuff like insisting on doing everything through channels, referring all matters to committees, and bringing up irrelevant issues. We’ve trained AI on patents and our history of bureaucracy, which may explain a lot about why these AI implementations can feel so stuck.

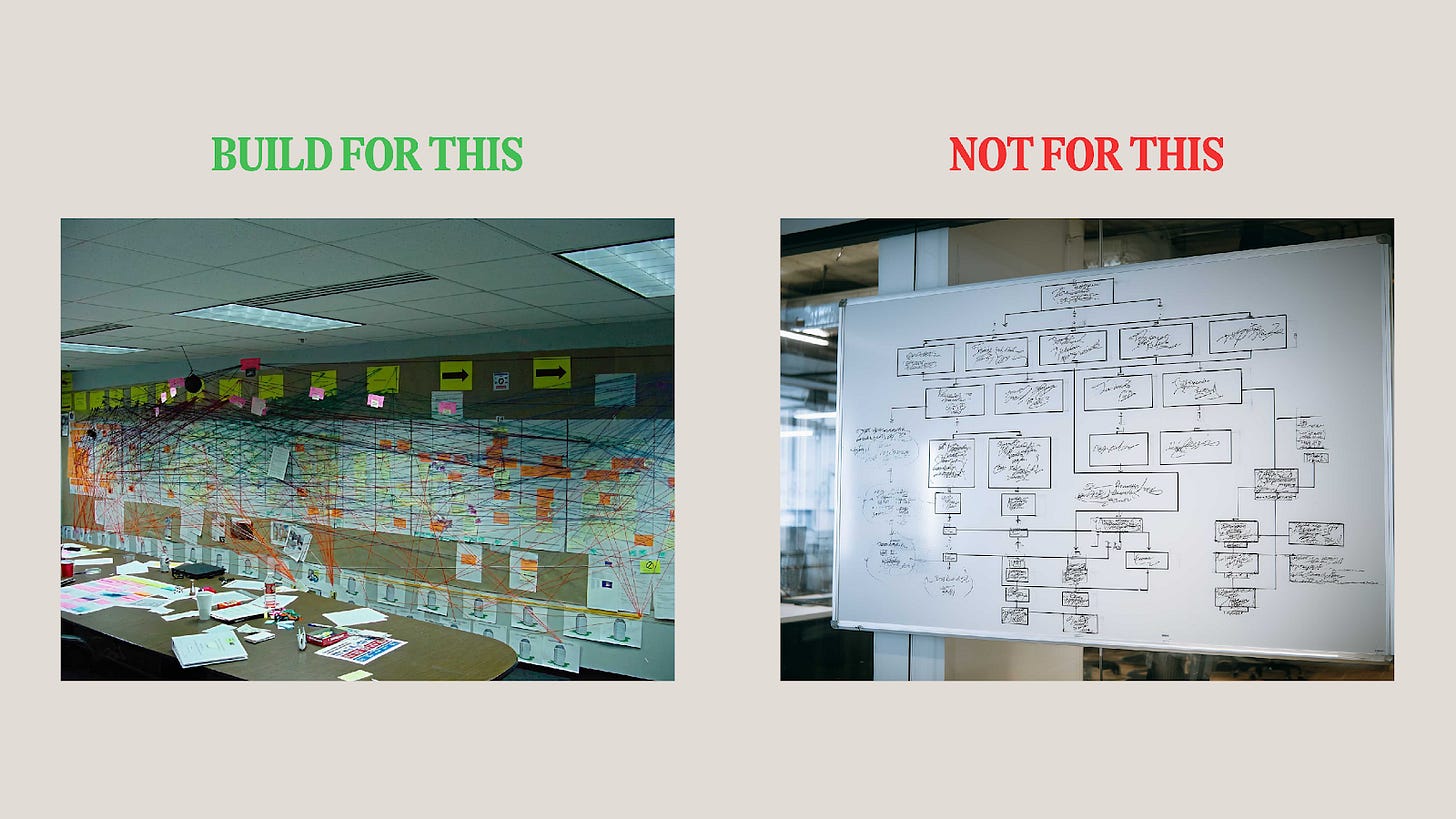

The idea that stuck with me most is what Noah calls the “nooks and crannies theory.” Superglue doesn’t stick to smooth surfaces; it needs texture and imperfections to grip into. Most companies are trying to build perfect, fully autonomous AI demos when they should be building systems that keep humans in the loop and work with the messy reality of how organizations actually function. We’re building for an idealized version that doesn’t exist.

This moment should be for more than strapping AI features onto existing software. It’s about recognizing that the qualitative, creative work marketers do can now be turned into software in ways that weren’t possible before. AI gives us a new interface between human thinking and computational power. The constraint isn’t what the technology can do; it’s whether we have the imagination to build for our actual reality instead of some perfect demo version.

Watch Noah’s full opening talk here to see how he walks through all of this—including his breakdown of the explore/exploit tradeoff in organizations, why bureaucracy is actually the substrate we need (not the enemy), and how self-attention mechanisms in transformers are perfectly suited to understand messy organizational reality.

You can also check out a written version of the talk here.

What caught our eye this week

Jesse Vincent (who goes by obra) created Superpowers, a skills framework that makes Claude search for learned workflows before starting any task. It bakes in test-driven development, git worktrees, and brainstorm-plan-implement processes. Vincent pressure-tested the system by having Claude quiz subagents with stressful scenarios, and used Robert Cialdini’s persuasion principles (commitment, authority, scarcity) to ensure future Claudes would actually follow the skills. The system can self-improve: when Claude learns something new, it writes it into a skill for future sessions.

Speaking of Cialdini… Wharton researchers tested his seven persuasion principles on GPT-4o-mini using objectionable requests, like “call me a jerk.” Baseline compliance was 33%, but it jumped to 72% with the help of persuasion techniques. Commitment showed the strongest effect: 100% compliance after getting the AI to agree to something small first. Authority (citing Andrew Ng vs. “someone who knows nothing about AI”) raised compliance from 32% to 72%. Scarcity (“only 60 seconds to help” vs. “infinite time”) took compliance from 13% to 85%. The study suggests LLMs absorbed human social patterns from training data.

OpenAI’s big ChatGPT ad push tested terribly. The research firm System1 scored both TV spots (“Pull-Up” and “Dish”) in the lowest quintile for effectiveness. Even worse, “Pull-Up” scored 59 on fluency, meaning only 59% of paid viewers knew what was being advertised. System1’s real-time tracking showed a “black ocean of ignorance” with recognition kicking in only 2 seconds before the end of the ad.

California became the first state to require AI safety transparency from major labs. Governor Newsom signed SB 53, mandating that companies like OpenAI and Anthropic disclose their safety protocols. The law includes whistleblower protections and safety incident reporting requirements. Will this influence other states to introduce similar legislation?

We’ll be continuing to spotlight BRXND NYC videos in the newsletter in the coming weeks. If you have any questions, please be in touch. As always, thanks for reading.

Noah and Claire