AI + Tacit Knowledge // BrXnd Dispatch vol. 057

BRXND LA, building AI systems, and the value of tacit knowledge

You’re getting this email as a subscriber to the BrXnd Dispatch, a (roughly) weekly email at the intersection of brands and AI. Last week, we announced that the next edition of the BRXND Marketing X AI Conference will be in LA on 2/6. If you’re interested in attending, you can leave your email here, and if you’re interested in sponsoring, we’ve got a form for that as well. (Oh, and if you want to speak or know someone who should, let me know that too.)

I’ve got a ton of things going on at the moment (some of which I hope to share very soon), but I also know I haven’t shipped one of these in a while, so I thought it was worth pulling some thoughts together.

Beyond all my other projects, I’m still working on Marshall, my AI assistant, and my Obsidian tools, which summarize my Obsidian notes nightly and weekly. More recently, though, I’ve been working on a new system for parsing transcripts. It builds on some of what I wrote about back in July, but it expands the idea by:

Automating the transcription: I moved from Otter to Fireflies purely because they offer an API to hook into the system.

Refining the format: I made it much more focused on the exact output I wanted. All these companies give you summaries, but none do it exactly the way I like.

Adding account summaries: Each time I meet with a company, the system writes a new summary of my relationship with them that incorporates the previous summary and the new transcript. Once a week, all of these are compiled into a snapshot posted to Slack.
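For anyone curious about the mechanics, the account-summary step is essentially a fold: each new transcript is combined with the previous summary to produce the next one. Here’s a minimal Python sketch of that loop, assuming a hypothetical `summarize` callable that wraps whatever model you use. The `AccountStore` class, the prompt wording, and the snapshot format are all illustrative, not the actual system:

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical: any function that takes a prompt string and returns model output.
Summarizer = Callable[[str], str]


@dataclass
class AccountStore:
    """Keeps one rolling summary per company (illustrative, in-memory only)."""

    summaries: dict[str, str] = field(default_factory=dict)

    def update(self, company: str, transcript: str, summarize: Summarizer) -> str:
        # Fold the new transcript into the previous summary, if one exists.
        previous = self.summaries.get(company, "(no prior meetings)")
        prompt = (
            f"Current summary of our relationship with {company}:\n{previous}\n\n"
            f"Transcript of our latest meeting:\n{transcript}\n\n"
            "Rewrite the summary so it reflects both."
        )
        self.summaries[company] = summarize(prompt)
        return self.summaries[company]

    def weekly_snapshot(self) -> str:
        # One block per company, alphabetized, ready to post wherever you like.
        return "\n\n".join(
            f"*{name}*\n{summary}" for name, summary in sorted(self.summaries.items())
        )
```

Passing the model call in as a plain function keeps the sketch runnable without an API key; the string from `weekly_snapshot()` is what you’d hand to whatever posts to Slack.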

None of this is super complicated, and the additional work didn’t take long, but it has made me think a lot about how the value of an AI response seems to grow with the specificity of your input. The more unique data and knowledge you give the system, the more unique and high-quality the output tends to be. This makes sense. After all, without your input, the AI is left to rely purely on its training. But this also starts to spell out some of the challenges I think many folks are having with the existing AI feature paradigm (like the summaries all these call recording companies offer): it’s very generic. The way you build SaaS is by generalizing problems, and the way you get value out of AI is by specifying problems. I’m sure there are good ways to square those two truths, but it’s not yet clear exactly what they are.

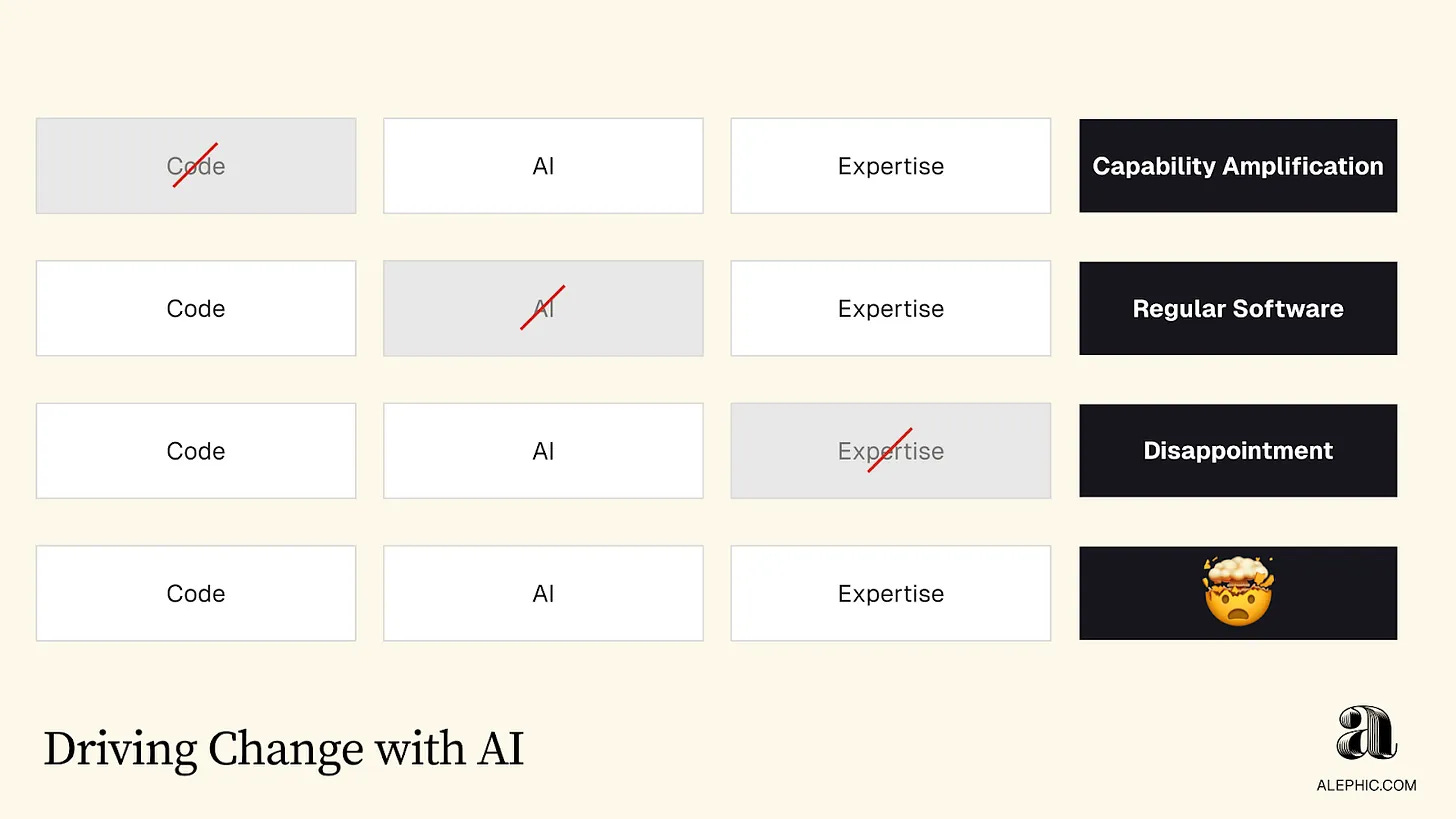

This is the point I was trying to make here:

When you just have code + AI, you get … disappointment. It does some AI stuff, but it’s not always that helpful. Many folks are trying to solve that, and I’m sure some of them will, but it feels like the fundamental hurdle all this new software needs to clear.

To that end, I’ve been thinking a bunch about specific and tacit knowledge. The former concept comes from a 1995 paper by economists Michael C. Jensen and William H. Meckling and refers to “knowledge that is costly to transfer among agents.” It’s the expertise deeply embedded in individuals and organizations, which makes it hard to replicate or codify. It isn’t just facts or processes; it also captures “tacit knowledge,” the learning by doing that makes people uniquely good at their jobs. Interestingly, in his definition of tacit knowledge from the HBR article “The Knowledge-Creating Company,” organizational theorist Ikujiro Nonaka talks about “fingertips,” a topic that’s close to my heart:

Tacit knowledge consists partly of technical skills—the kind of informal, hard-to-pin-down skills captured in the term "know-how." A master craftsman, after years of experience, develops a wealth of expertise "at his fingertips." But he is often unable to articulate the scientific or technical principles behind what he knows.

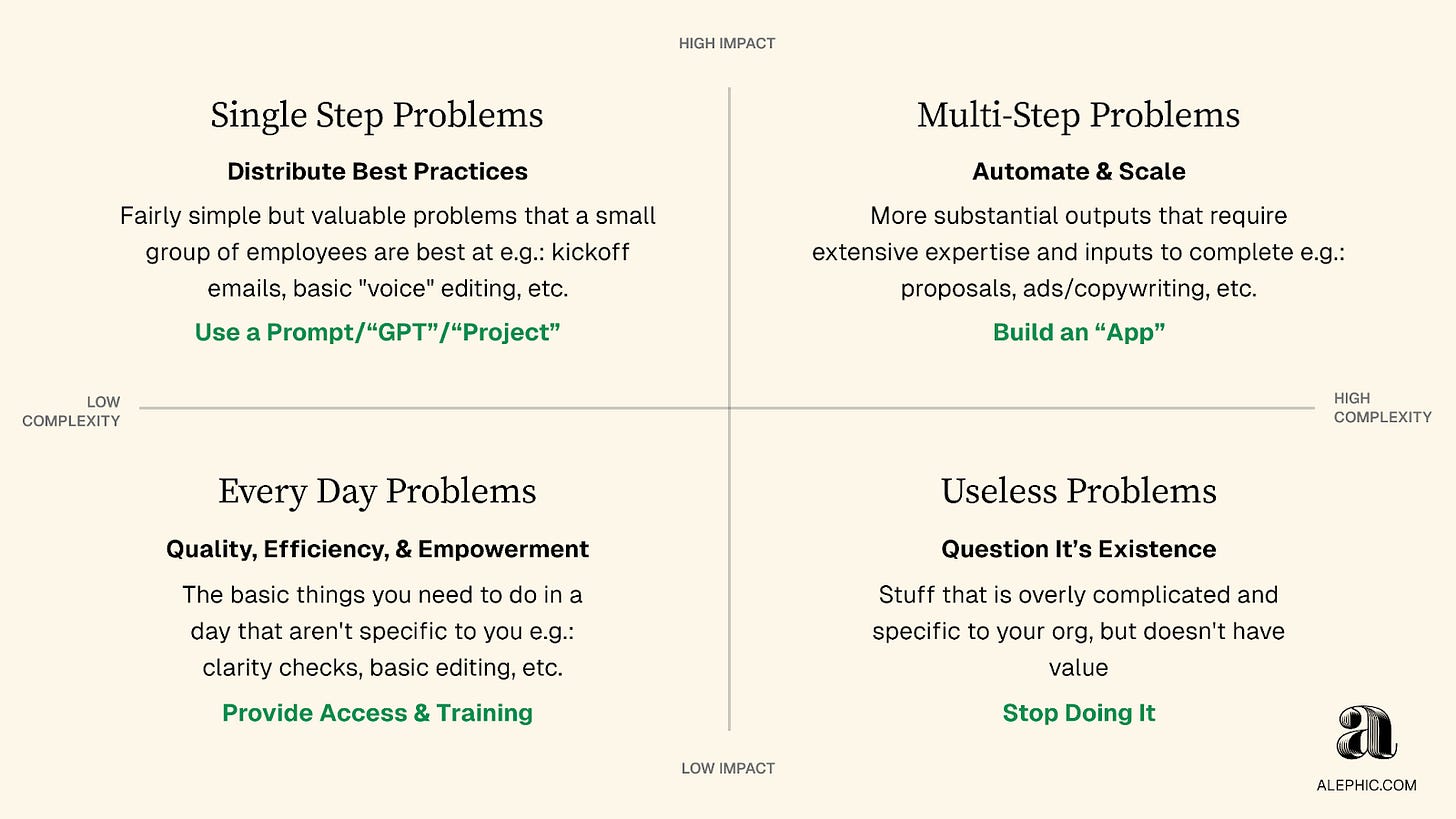

There are two dimensions to this specific/tacit knowledge idea as it relates to AI. First, it’s precisely this kind of hard-to-write-down expertise that can really drive up the quality of a system’s output. In my experience, building with people who are deeply capable of doing the job you’re trying to accomplish, and who can articulate their ways of working, is the magic unlock for AI. Second, these systems offer an opportunity to address the problem Jensen and Meckling describe in their paper: the costly transfer of specific knowledge. Even something as simple as a well-written prompt/GPT/Project can transfer specific knowledge throughout an organization and raise the quality of the group’s work and decision-making. That’s essentially what I’ve been trying to articulate with the top-left box of my 2x2:

The top-left quadrant represents a sweet spot where you can effectively distribute specific knowledge. It’s about capturing basic yet valuable ways of working that don’t require complex multi-step workflows but, when distributed, can significantly raise the bar for the entire organization. A prime example I often use is writing kickoff emails. While AI can typically handle this task in a single pass given good inputs, the quality of the output depends heavily on the individual’s understanding of what truly matters in these communications. By codifying this knowledge into AI prompts or tools, you’re essentially democratizing expertise across the organization.
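To make the kickoff-email example a bit more concrete, codifying that knowledge can be as simple as a reusable prompt template. This is a hypothetical Python sketch: the `KICKOFF_GUIDANCE` rules are placeholders for whatever your most experienced person actually knows, and `build_kickoff_prompt` just assembles text you’d paste into (or send to) a model:

```python
# Placeholder rules: stand-ins for the tacit knowledge of your best account lead.
KICKOFF_GUIDANCE: tuple[str, ...] = (
    "Restate the goal of the engagement in one sentence.",
    "Name the decision-makers on both sides.",
    "List the next three dates that matter.",
    "Close with one clear ask.",
)


def build_kickoff_prompt(
    client: str, notes: str, guidance: tuple[str, ...] = KICKOFF_GUIDANCE
) -> str:
    """Assemble a single-pass prompt that carries the codified guidance."""
    rules = "\n".join(f"- {rule}" for rule in guidance)
    return (
        f"Draft a kickoff email to {client}.\n"
        f"Follow these rules from the most experienced people on the team:\n{rules}\n\n"
        f"Project notes:\n{notes}"
    )
```

The code is trivial on purpose; nearly all of the value lives in the guidance list, which is exactly the kind of specific knowledge that quadrant is about distributing.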

I think that’s it for this week. Thanks for reading, subscribing, and supporting. As always, if you have questions, want to chat, or are looking to sponsor the conference, please be in touch. I hope to start sending emails with ticket offers to previous attendees in the next few days and open up further in the coming weeks.

Thanks,

Noah

Reminds me of this passage:

““My dear Byerley, I see that you instinctively follow that great error—that the Machine knows all. Let me cite you a case from my personal experience. The cotton industry engages experienced buyers who purchase cotton. Their procedure is to pull a tuft of cotton out of a random bale of a lot. They will look at that tuft and feel it, tease it out, listen to the crackling perhaps as they do so, touch it with their tongue,—and through this procedure they will determine the class of cotton the bales represent. There are about a dozen such classes. As a result of their decisions, purchases are made at certain prices, blends are made in certain proportions.—Now these buyers cannot yet be replaced by the Machine.” “Why not? Surely the data involved is not too complicated for it?” “Probably not. But what data is this you refer to? No textile chemist knows exactly what it is that the buyer tests when he feels a tuft of cotton. Presumably there’s the average length of the threads, their feel, the extent and nature of their slickness, the way they hang together, and so on.—Several dozen items, subconsciously weighed, out of years of experience. But the quantitative nature of these tests is not known; maybe even the very nature of some of them is not known. So we have nothing to feed the Machine. Nor can the buyers explain their own judgment. They can only say, ‘Well, look at it. Can’t you tell it’s class-such-and-such?’” “I see.” “There are innumerable cases like that. The Machine is only a tool after all, which can help humanity progress faster by taking some of the burdens of calculations and interpretations off its back. The task of the human brain remains what it has always been; that of discovering new data to be analyzed, and of devising new concepts to be tested. A pity the Society for Humanity won’t understand that.” “They are against the Machine?” “They would be against mathematics or against the art of writing if they had lived at the appropriate time. 
These reactionaries of the Society claim the Machine robs man of his soul. I notice that”

— I, Robot by Isaac Asimov

https://a.co/egSnmGP