Building Intuition in Marketing & AI // BrXnd Dispatch vol. 024

The first of the BrXnd conference videos is now live.

You’re getting this email as a subscriber to the BrXnd Dispatch, a (roughly) bi-weekly email at the intersection of brands and AI. I am working on finalizing SF Conference dates and should have those in the next few weeks. As always, if you have any questions, you can reply to this email or send me a note.

Hi all, and greetings from Italy. Many of you sent great feedback as I've gotten back into the swing of this email, so thanks for the encouragement. Since I'm on vacation this week, I'm keeping things light and sharing my talk from May's BrXnd Conference NYC.

The talk was about the process of building intuition around these new tools (building on a post I wrote for this newsletter in February). It was an attempt to explain why I was putting on the day, but also why I fundamentally believe we're all just learning and there are no real experts in this space (despite quite a bit of noise to the contrary).

Here’s the meat of the thesis on intuition. It’s not exactly what you’ll hear in the video (which is embedded at the bottom), but it gets at the key point I wanted to communicate to open the day.

So why, exactly, are we here?

I’ve been pretty disappointed by the state of the discourse around AI, particularly in the marketing industry. When I look around, I see three general themes:

ChatGPT Tips: “23 Tips to Use ChatGPT to Write Better SEO Headlines for Roadside Motels”

AI Replacing Jobs: “The AI Art Director/Copywriter/Office Puppy”

AGI & The End of Human Civilization: “AGI is Ready to Slurp our Brains”

While it's totally possible there are good ideas in those articles (possible, not probable), none of them captures the magic of this moment for me. The last six months have been some of the most creatively fulfilling of my life as I've integrated AI into nearly every part of my workflow. I use GitHub Copilot as a coding assistant, and I turn to ChatGPT for deeper questions about coding, for help understanding research that's beyond my technical grasp, and, most helpfully, for dealing with tasks I despise, like writing agreements. Meanwhile, Midjourney, a diffusion model, generated the abstract patterns attached to each talk for the conference, AI removed the background from each speaker photo, and countless little AI-powered scripts I've written do everything from summarizing bios to cleaning up transcripts.

I feel like I have a superpower. This is the most significant creative accelerant I’ve ever experienced.

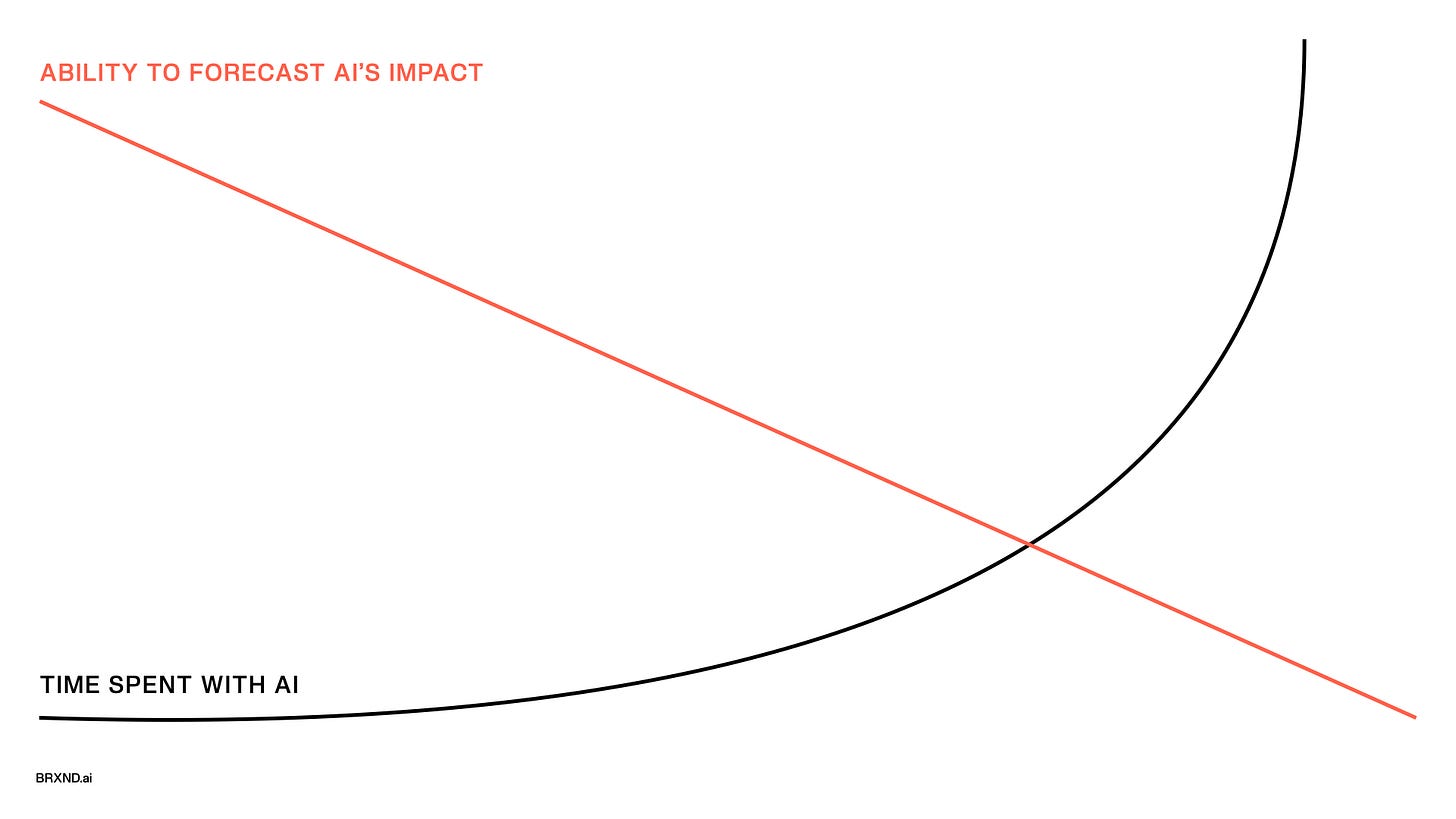

But it's also left me (and I'm assuming many of you) a bit confused. For as much time as I've spent building and working with this technology over the last year, I don't feel like it's given me some great foresight into how it's going to change society or even our industry. Paradoxically, the more time I spend with my hands on AI, the less capable I feel of forecasting its future impact.

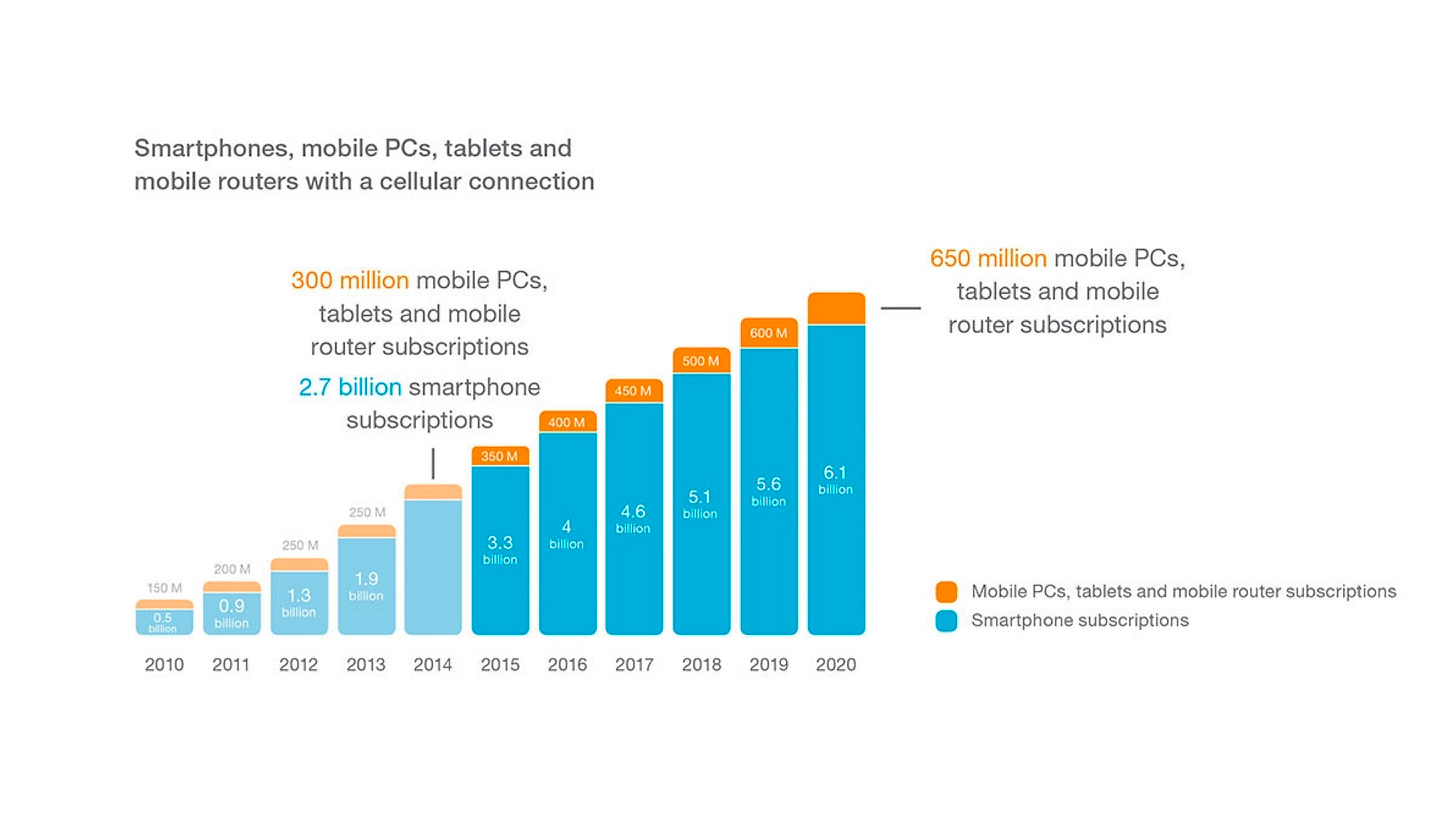

When we started Percolate in 2011, mobile phones, social media, and their combined effect on marketing were still in their infancy, but it was clear what the curves would look like. It was obvious those new channels would fundamentally reshape digital and, in turn, all of marketing as the available eyeballs exploded.

But AI doesn’t seem to be working quite the same way. That’s not to say it won’t have as large an impact—I think it will—but that it’s not at all clear exactly how or when that impact will appear.

Part of the reason is that so much of how AI works runs counter to the way we have trained ourselves to understand things. Take, for instance, integrations. For the last 15 years, a major part of the companies I've built has been integrating the tools we've made with other systems. To make that work, you need to follow the specs of the larger player. So at Percolate, when we integrated with Facebook, Twitter, or Salesforce, we followed their rules to a T; otherwise, the integration simply wouldn't function. This makes sense: computers rely on data being sent and received in a consistent manner to work correctly. But when I got a chance to build a ChatGPT plug-in, I was very surprised to find that OpenAI had flipped the integration model. Instead of the platform defining how data must be sent and received, the integrator describes it. This is possible because they're using AI as a translation layer: a fuzzy interface between systems.

I've struggled to find a perfect analogy for this because it runs counter to so much of how the world works. It would be like IKEA selling you a box of materials and then giving you build instructions only after you got home and told them what you wanted. Or like a universal fitting that could connect any two pipes but could also make hot water cold and cold water hot. When I called a friend to help me come up with an analogy, he told me a story about being in Afghanistan with the Army and needing new parts for their Humvees. Instead of waiting on the DoD's slow, pork-filled, bureaucratic supply chain, they brought in a 3D printer capable of generating any part they needed on demand.

None of these analogies is perfect, but that's the point! This is super hard to analogize. The physical and digital worlds explicitly define how things should interact; when something unexpected happens, they break down or throw an error. But AI is perfectly fine with ambiguity.

Again, if you’ve spent your career doing things one way, it’s very hard to flip your brain to think about how it could work in a practically opposite manner.

And I’m not alone in feeling that. People who are much closer to the tech are saying much the same thing. Miles Brundage, who heads up policy research at OpenAI, tweeted in January that “The value of keeping up with AI these days is not so much detailed foresight but being able to confidently detect when others are too confident, and that things are more uncertain than anyone lets on. Kinda like that line about philosophy being a defense against bad philosophy.”

It's been rattling around in my head ever since. Spending lots of time with AI hasn't given me a particularly finely tuned forecasting ability (beyond the conviction that it will have a major impact), but it has given me quite a bit of insight into when people are overconfident in their forecasts.

Video

I go on from there to talk about tinkering (building “fingerspitzengefühl”) and go through a few of my experiments and what I’ve learned from them.

I hope you enjoy it and find it interesting. I’ll be sharing more videos as I get them up on YouTube. As always, feel free to be in touch if there’s anything you want to talk about or I can help with.

Also, a request: I am working on a series of events for the fall in partnership with various brands. As part of that, I am curating a set of AI companies to present to those brands and am looking to schedule as many product demos as possible. If that's you and you're interested in getting in front of hundreds of hungry marketers, please get in touch to schedule a demo.

Thanks,

Noah