Fiverr, The Term "Generative AI", and More // BrXnd Dispatch vol. 007.5

Who is actually at risk of getting disrupted, plus lots more links

Hi everyone. Welcome to the BrXnd Dispatch: your bi-weekly dose of ideas at the intersection of brands and AI. The .5 edition is a quick roundup of links and thoughts. I hope you enjoy it. As always, send good stuff my way, and please share this with others who might appreciate it. - Noah

[LinkedIn] Fiverr, the freelance marketplace, ran an ad about AI in the New York Times. The gist is that it's an "open letter" to AI in which the company explains that it thinks humans and AI work best together. "I believe that's where our freelancers can help by giving you some personality, leading to better results. When we work together, wonderful things happen, as exemplified by this letter that you helped write. Thank you, by the way. Look at us, we're already finishing each other's sentences. Literally."

The ad is … fine. More interesting to me is its focus on creative output. In many ways, I think too much ink is being spilled right now on how these tools will disrupt human creativity. While I certainly believe they can produce amazing results, the threat to Fiverr isn't so much the writing or images they create, but rather the basic data work that so many people turn to Fiverr and Mechanical Turk for, which can now be done more quickly and cheaply with AI. My post about using TypeScript interfaces as prompts shows how capable these models are at basic data extraction and organization. Disruption of writing and other creative work is certainly on the horizon, and it makes for a better headline (and a better ad), but I suspect the immediate displacement of human work by these machines will mostly happen in work that lives in spreadsheets.

[YouTube] I’m off in Montana this weekend, teaching my yearly class at the University of Montana’s Entertainment Management program. As part of it, I will be discussing—shockingly—AI (and more specifically, how I approach building an intuition for a new space like this). Anyway, we’re going to be doing some exercises in class (which I will share after), and my friend Nick sent over this great Vox explainer about diffusion models that I plan to share:

[AI Snake Oil] This December post from AI Snake Oil on what LLMs are good at is definitely worth a read. Here’s their short list of tasks:

- Tasks where it's easy for the user to check if the bot's answer is correct, such as debugging help.
- Tasks where truth is irrelevant, such as writing fiction.
- Tasks for which there does in fact exist a subset of the training data that acts as a source of truth, such as language translation.

[Twitter] Sam Altman doesn’t like the term “generative AI”:

[Substack] Rob Schwarz and Steve Bryant both wrote about uncreative.agency, which was an experiment in generating advertising ideas via AI.

[Twitter] Kevin Kelly perfectly summarizes the issue facing AI projects from large companies:

It also ties in with this excellent Washington Post piece, "Big Tech was moving cautiously on AI. Then came ChatGPT." This narrative seems much more reasonable to me than the "Code Red" Google story. These companies weren't caught off guard by AI. They've been working on it for ages. They decided to keep it locked up until they were more certain about its output and effects, and they have now had to reconsider that position in the face of the aggressive posture of OpenAI et al.

[Forbes] David Armano wrote a piece about AI and marketing for Forbes with a nice shoutout for BrXndscape, my marketing AI landscape.

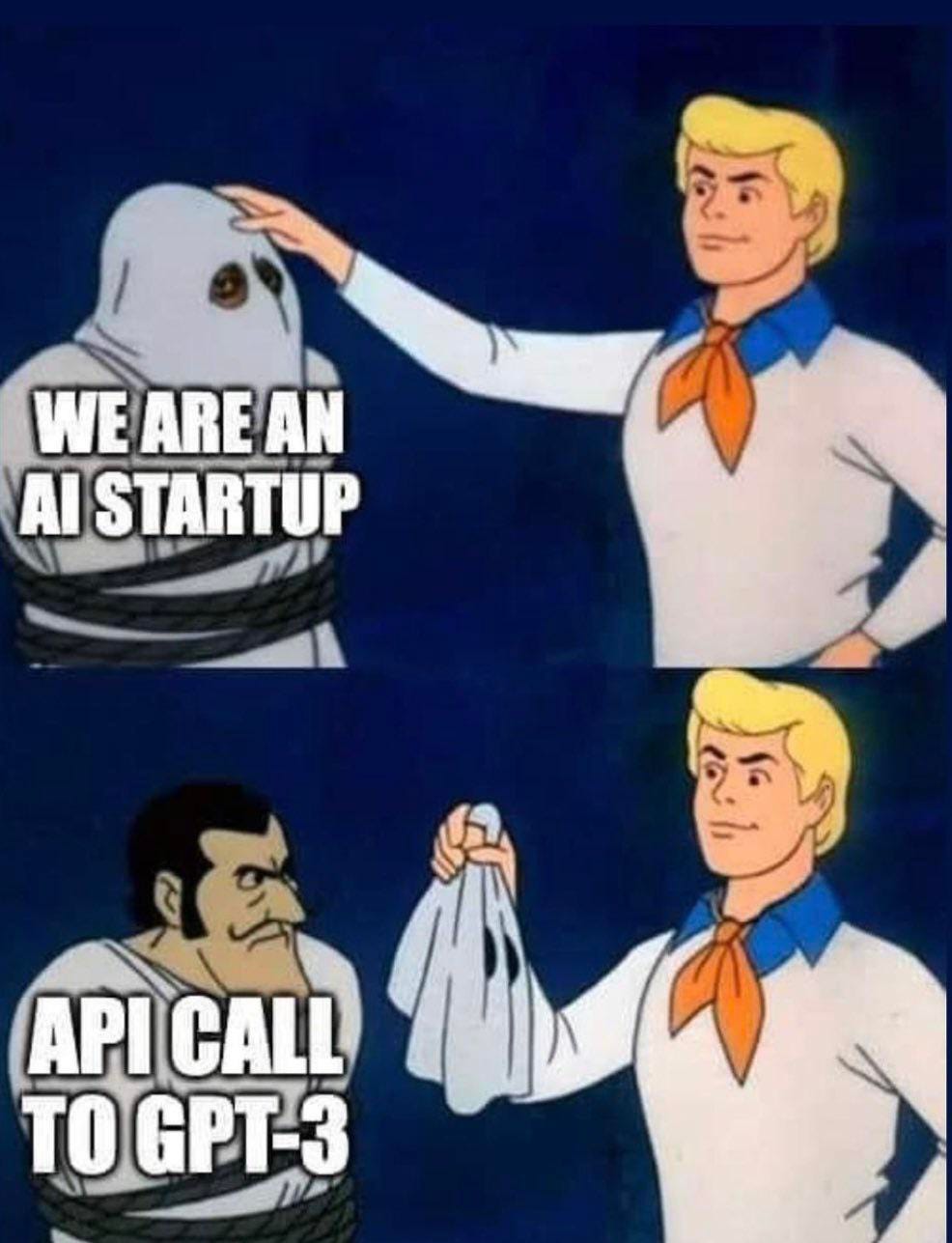

[Reddit] Sometimes, an image says it all …

That’s it for this week. Thanks for reading, and please send over any links you think are worth reading. Also, while I have you, I’m actively looking for sponsors for my BrXndCon event in mid-May in NYC. If you’re interested, please reach out.

— Noah