Hallucinations! (for Fun and Profit) // BrXnd Dispatch vol. 025

On hallucinations and the UX of LLMs.

You’re getting this email as a subscriber to the BrXnd Dispatch, a (roughly) bi-weekly email at the intersection of brands and AI. I am finalizing dates for the SF conference and should have them in the next few weeks. As always, if you have any questions, you can reply to this email or send me a note.

Hi there, I’m back again with another fun edition of the newsletter as I get more of the videos from May’s NYC conference uploaded. Today’s talk comes from Tim Hwang. Tim is one of the most brilliant people I know (on nearly any topic; be sure to ask him about tarot and dredging) and has a deep background in AI and machine learning, having spent time at both Google and Harvard focused on the policy and ethics of AI. He gave the second talk of the day, right after mine, about building intuition with AI, and I thought it built perfectly on some of the things I talked about. (Full video at the bottom.)

Tim’s talk was aptly titled Hallucinations! (For Fun and Profit). It dove into what hallucinations are, why the way we view them is partly a function of the chat UX, how we can harness hallucinations in marketing as a feature rather than a bug, and, more speculatively, how different UX approaches might change the way we view LLM responses.

Some Quick Housekeeping

In addition to the San Francisco conference, I am working on a series of events for the fall in partnership with various brands. As part of that, I am curating a set of AI companies to present to those brands and am looking to schedule as many product demos as possible. If that describes you and you’re interested in getting in front of hundreds of hungry marketers, please get in touch to schedule a demo. Also, if you aren’t listed in the BrXndscape: Marketing AI Landscape, be sure to request to be added.

Before the video, I wanted to write up a few of the points that really resonated with me. Of course, you should watch Tim give the full talk, and I’ve posted the transcript on the site as well (an AI-generated version, at least).

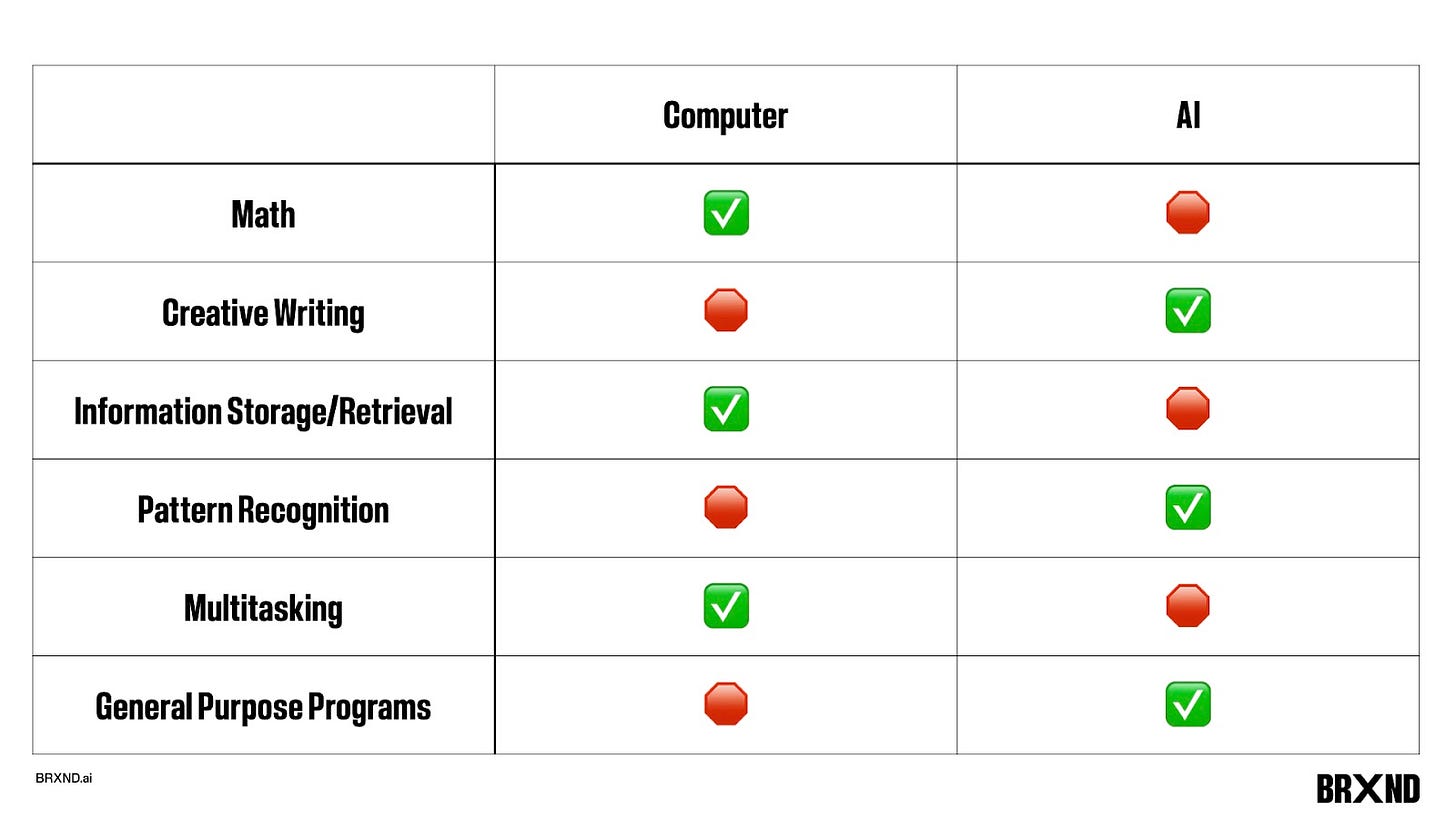

To start, Tim did a great job explaining where hallucinations come from, but his point that large language models (LLMs) are bad at everything we expect a computer to be good at and good at everything we expect a computer to be bad at that really hit me. In fact, after his talk, I put together this little slide to help share with others because I thought he summarized things so nicely:

Given this, Tim went on to explain just how odd it is that the industry heavyweights have decided to apply this tech to search first:

It’s in some ways kind of an absurdity, an incredible colossal irony, that the very first product that Silicon Valley wanted to apply this technology towards is search. So Bard is the future of Google search, and Bing chat is the future of the Bing search engine. But ultimately, these technologies are not good at facts; they’re not good at logic. All of these things are what you use search engines for. And, in fact, there’s actually a huge kind of rush right now in the industry where everybody has rushed into implementing this technology and been like, “This is really bad.” And basically, what everybody’s doing right now is trying to reconstruct what they call retrieval or factuality in these technologies. The point here is that large language models need factuality as an aftermarket add-on. It isn’t inherent to the technology itself.

The reason for that, as Tim eloquently articulated, is that “LLMs are concept retrieval systems, not fact retrieval systems.”

To that end, the rest of the talk explores how to take better advantage of this “concept retrieval” power and what kind of UX might better match the system’s strengths.

Specifically, he spelled out what this means for brands (something I wholeheartedly agree with):

So if LLMs are a concept retrieval system, let's now apply this to brands. After all, what is a brand anyway? A brand is basically a collective social understanding of what a company represents to society. And in this sense, all a brand is is what people think of a brand. What would people likely say when asked, “So what does Coca-Cola mean to you?” Probably something like, “I don't know, it's soda, the red color.” These are the most likely outcomes. And so what I want to argue to you today is that concept retrieval, what LLMs represent, may actually be incredibly applicable to brands because, in the past, how did you really get at what the essence of a brand is? Well, you've got these very slow mechanisms for doing so: I can have a bunch of people fan out across America and do surveys very slowly. We can do focus groups. I can hire a bunch of people at a branding agency to do some research and write kind of like corporate poetry about what my brand is. Basically, what LLMs are doing is, in some ways, perfect for identifying, exploring, and manipulating brands as a concept. And so the slogan is that hallucinations are a feature and not a bug. Actually, it turns out that the technology that we have here is not good for everything that Silicon Valley is using it for, but it may be ideal for exactly the kinds of things that people in marketing are using LLMs for.

(If you want more on how a brand is the sum total of the thoughts people have about it, I highly recommend this piece from Martin Bihl; it’s one of my all-time favorite bits of marketing writing.)

With that, I’ll let you watch Tim’s talk in full:

I hope you enjoy it and find it interesting. I’ll be sharing more videos as I get them up on YouTube. As always, feel free to be in touch if there’s anything you want to talk about or I can help with.

— Noah