Long Weekend Projects, o3-mini, Chip Diplomacy // BRXND Dispatch vol. 067

Plus, the full BRXND LA agenda is live.

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of brands and AI.

Luke & Noah here. Hard to believe BRXND LA kicks off in Hollywood in a little over two weeks on 2/6. Our mission with this event is to help the world of marketing and AI connect and collaborate. This summit will feature world-class marketers and game-changing technologists discussing what’s possible today, including presentations from CMOs from leading brands talking about the effects of AI on their business, demos of the world’s best marketing AI, and conversations about the legal, ethical, and practical challenges the industry faces as it adopts this exciting new technology.

In case you missed it, we announced the full slate of speakers last week. It’s a stellar lineup, featuring top decision-makers from companies like Amazon, Airtable, Blue Diamond, Getty Images, PepsiCo, Zillow, and more.

Also, because some were unable to take advantage of it last week due to the wildfire situation, we are extending our 10% ticket discount for a few days longer. If you would like to join us, alongside ~200 leading voices in AI and marketing, we recommend registering before this deal expires on Friday, 1/24.

What caught my eye this week (Noah)

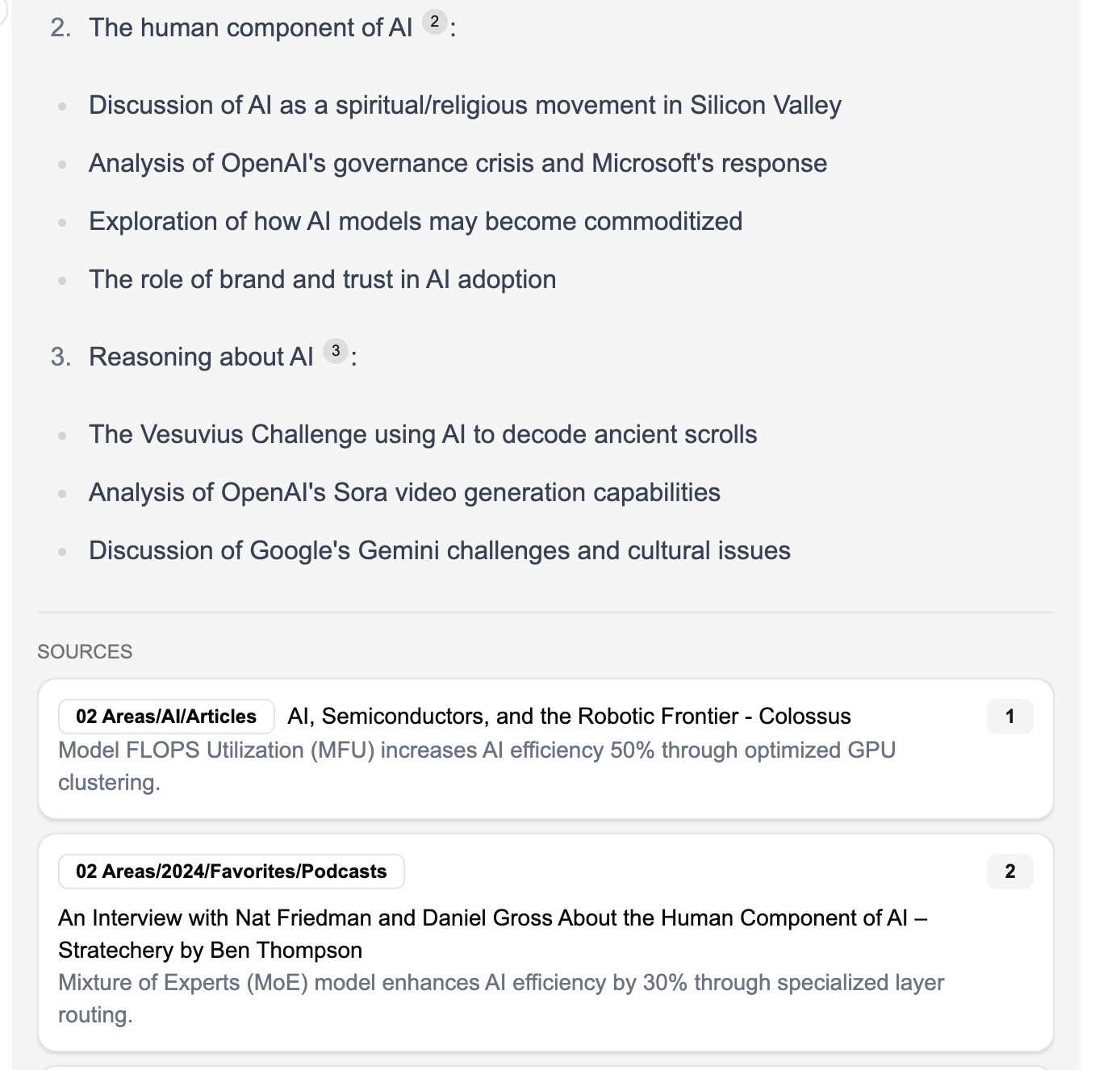

I have a bad tendency to start some crazy project every time a vacation or long weekend comes along. This past Saturday was no exception. I had the idea to build a simple local AI tool that would interact with my local notes (Obsidian) and help me think through some writing (and help me write my talk for the conference). It was a chance to play more with the new local models available on Ollama and to go deeper on some frontend stuff I’ve been wanting to try. I spun up a new project and got coding. I’ve been playing with Vercel’s AI SDK, which essentially makes it easy to build dynamic UIs that interact with the models. Plus, I figured it would play nicely with v0.dev, Vercel’s AI interface design tool. I thought what I wanted was reasonably straightforward: footnoting like it works in ChatGPT and Perplexity. Something like this:

That’s an actual screenshot, so I eventually figured it out. But it took a crazy amount of time to get there. I was trying to use AI to help (v0.dev and Cursor), but I really wasn’t getting anywhere. I thought I was doing something wrong. I even tried feeding it all the documentation (via llms.txt), but still nothing. Then, finally, after what must have been about eight hours of banging my head against a wall, I carefully read the docs for the AI SDK and realized they had built-in handling for annotations. None of the AIs ever told me that, in part because it’s a pretty new thing, which means there wasn’t much training data, and in part because I didn’t know to ask for it. This is a clear limit to the current generation of models: even with web search, they’re limited in the new context they can add. But I knew that. Something in this example went beyond that. I still have no idea why no system suggested I just pass the context back as annotations, which was built in from the start, but I felt like it was a small win for my humanity.
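For readers who want to picture the footnoting idea, here is a minimal, library-free sketch of it: the notes used as context travel with the assistant message as annotations, and the UI turns `[n]` markers in the answer into a footnote list. The `SourceAnnotation` and `AnnotatedMessage` shapes and the `renderWithFootnotes` helper are hypothetical names made up for illustration; they are not the AI SDK’s own types or API, so check the SDK docs for the real annotation handling.

```typescript
// Hypothetical shapes for illustration only; NOT the AI SDK's actual types.
interface SourceAnnotation {
  type: "source";
  id: number; // footnote number that appears in the answer as [1], [2], ...
  title: string; // e.g. the title of the note
  path: string; // where the note lives in the vault
}

interface AnnotatedMessage {
  role: "assistant";
  content: string;
  annotations: SourceAnnotation[];
}

// Append a footnote list for every [n] marker that matches an annotation.
// Footnotes are listed once each, in order of first appearance in the text.
function renderWithFootnotes(message: AnnotatedMessage): string {
  const byId = new Map<number, SourceAnnotation>();
  message.annotations.forEach((a) => byId.set(a.id, a));

  const cited: number[] = [];
  const marker = /\[(\d+)\]/g;
  let match: RegExpExecArray | null;
  while ((match = marker.exec(message.content)) !== null) {
    const id = Number(match[1]);
    // Skip markers with no matching annotation and markers already listed.
    if (byId.has(id) && cited.indexOf(id) === -1) {
      cited.push(id);
    }
  }

  if (cited.length === 0) {
    return message.content;
  }

  const notes = cited.map((id) => {
    const source = byId.get(id) as SourceAnnotation;
    return `[${id}] ${source.title} (${source.path})`;
  });
  return `${message.content}\n\n${notes.join("\n")}`;
}

// Example usage with made-up notes.
const exampleMessage: AnnotatedMessage = {
  role: "assistant",
  content: "Your talk outline builds on an earlier draft [1] and a reading note [2].",
  annotations: [
    { type: "source", id: 1, title: "Talk draft", path: "drafts/talk.md" },
    { type: "source", id: 2, title: "Reading note", path: "notes/reading.md" },
  ],
};

console.log(renderWithFootnotes(exampleMessage));
```

The point of the design is that the model never has to reproduce file paths in its answer. It only emits short markers, and the structured source data rides alongside the message where the UI can use it.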

What caught my eye this week (Luke)

OpenAI’s first model release of 2025, o3-mini, is coming soon, with API and ChatGPT access slated to launch simultaneously. Sam Altman describes the latest reasoning model as “worse than o1 pro at most things, but FAST” and confirms the company is focused on shipping o3 and o3-pro next.

AI blogger Gwern describes the flywheel taking shape at OpenAI: “Every problem that an o1 solves is now a training data point for an o3 (eg. any o1 session which finally stumbles into the right answer can be refined to drop the dead ends and produce a clean transcript to train a more refined intuition)…. If you’re wondering why OAers are suddenly weirdly, almost euphorically, optimistic on Twitter and elsewhere and making a lot of haha-only-serious jokes, watching the improvement from the original 4o model to o3 (and wherever it is now!) may be why.”

OpenAI’s “Operator” agent isn’t here yet, but for now there’s Tasks, a new beta feature in ChatGPT that allows users to schedule future actions and reminders.

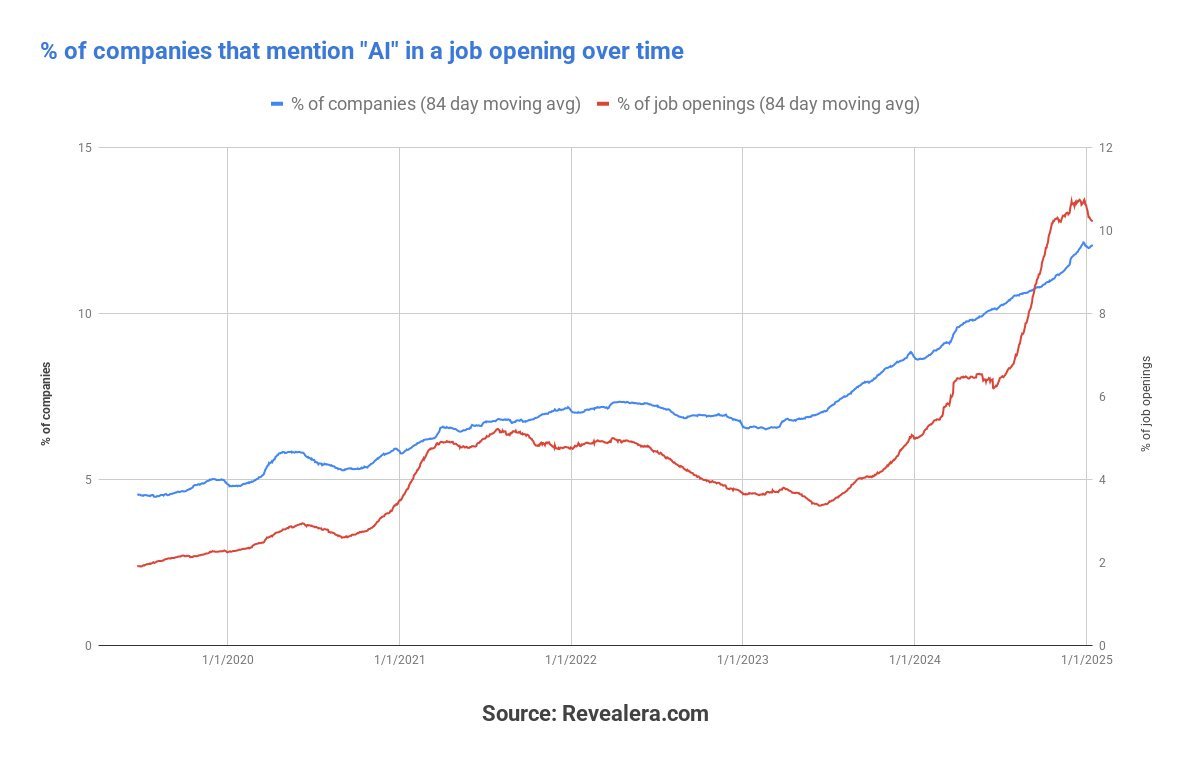

Is the AI hype cooling? Far from it. The number of companies that mention “AI” in a job posting is up 45% from a year ago.

This stat got me thinking about where AI actually creates value in organizations, and this quote from Goldman CEO David Solomon really crystallizes something important: Their teams can now use AI to draft an S-1 filing—traditionally a two-week project for six people—in just minutes, with about 95% accuracy. But here’s the kicker: “The last 5% now matters because the rest is now a commodity.”

This hits on something we’ve seen repeatedly: AI isn’t just about speed and efficiency—it’s about shifting where human expertise matters most. As the baseline work becomes more automated, the real value moves upstream to judgment and decision-making. Think about it like this: When tasks like writing code get cheaper and faster, the critical question becomes, “what should we build?”

Chip Huyen’s “Common pitfalls when building generative AI applications” is essential reading for engineers right now—she keeps the advice short and practical.

Given the recent changing of the guard in Washington, last week’s news that the US will start restricting the export of AI chips to a long list of rival powers, including China, may not ultimately amount to much. But if Trump decides to enforce Biden’s AI decree, the potential impact on global competition could be profound. US to world: “AI for me but not for thee.”

As always, if you have questions or want to chat about any of this, please be in touch.

Thanks for reading,

Noah & Luke

Have you tried the Obsidian community plugin 'smart connect'? It’s a good base, but sometimes I feel it gets stuck on the same notes.

Though if yours is available, I’ll be happy to test it.