On Setting Expectations // BRXND Dispatch vol. 78

Plus, the Shopify memo, Llama 4, and why AV is now a marketing problem.

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of marketing and AI. We’re hosting our next BRXND NYC conference on September 18, 2025, and are currently looking for sponsors and speakers. If you’re interested in attending, please add your name to the waitlist. Because space is limited, we’re prioritizing attendees from brands. If you work at an agency, just bring a client along; contact us if you’d like to arrange this.

Food for Thought (Noah)

Last week, we presented a project to a client, and it reminded me of something I’ve talked about a lot in person but never covered in depth in the newsletter. We were taking a process that currently runs fairly inefficiently on people and a bunch of spreadsheets, and using AI to automate much of it. The goal was to give the client a tool that streamlines the whole process and leaves them to make the final go/no-go call on the AI’s decisions. It’s not particularly creative, but it’s important (sorry, I’m trying to say as much as I can about the project without divulging anything sensitive).

The interesting wrinkle is that we know the AI won’t get everything right. So as we roll out the tool, we need to communicate a more realistic target so people aren’t surprised when it returns a wrong answer. It’s just as important to benchmark the new AI-powered process against a realistic picture of the old one. The existing human-and-spreadsheet setup probably lands around 70–80% accuracy. That should be our target, not 100%, which the AI has no shot of reaching. If you hope to see organizational uptake, you have to be honest about what you’re solving for and how well you solve it today.

What Caught My Eye This Week (Luke)

A leaked memo from Shopify CEO Tobi Lütke captures the inflection point many organizations are reaching with AI: “Reflexive AI usage is now a baseline expectation at Shopify.”

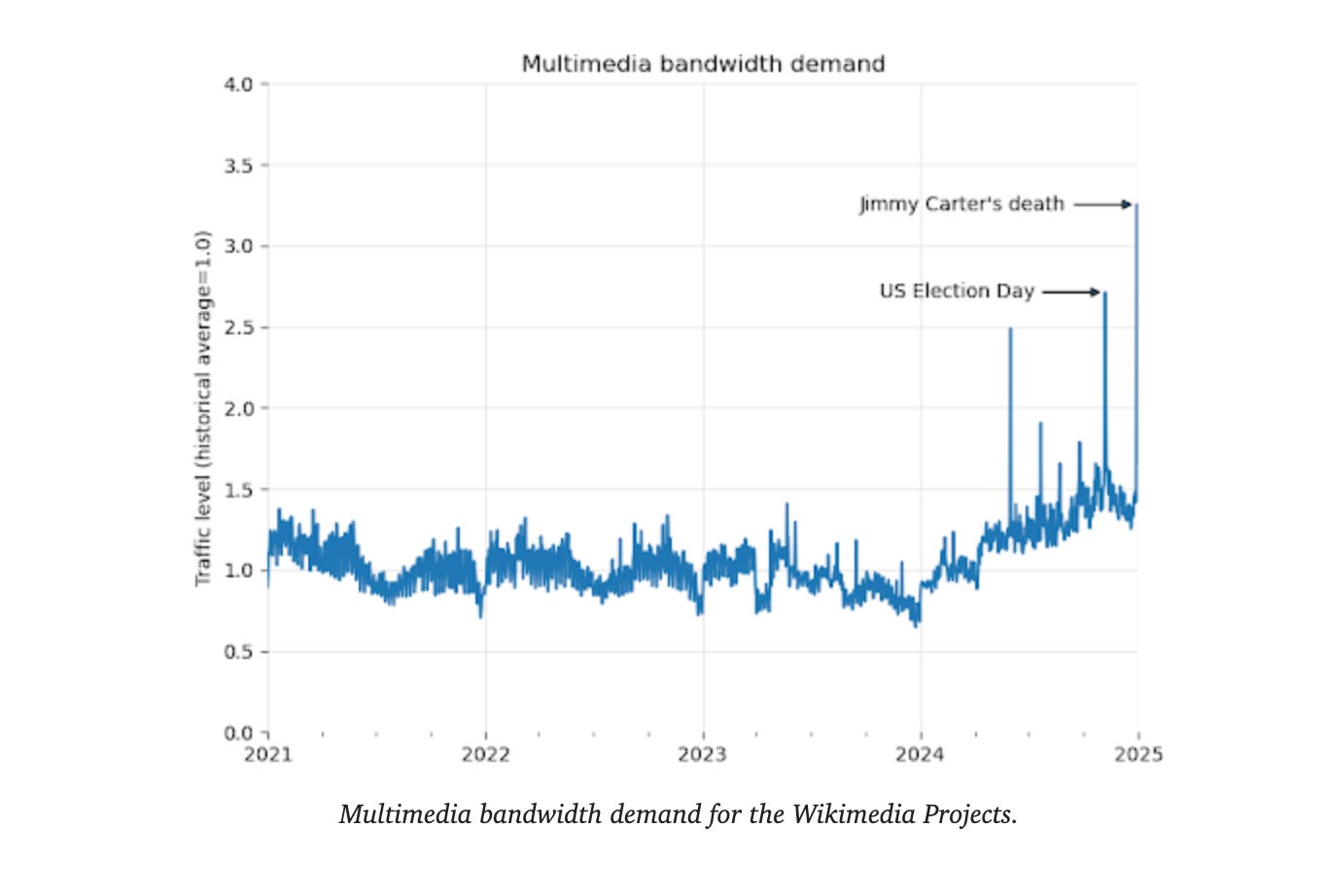

Wikimedia has seen multimedia bandwidth grow by 50% since 2024, with much of the surge attributed to bots scraping content to train AI models. (Random fact: Jimmy Carter’s death made Wikimedia traffic explode more than any other recent news event, apparently because so many people were watching his 1980 presidential debate with Ronald Reagan.)

From the NYT to Vox, hundreds of publishers are collectively lobbying the US government to take action to stop AI from training on copyrighted content. Who will rush in to fill the void left by legacy media? Conventional wisdom says producers of slop, but Noah’s not so sure (see: The Case for AI Content Optimism).

According to OpenAI, 130M ChatGPT users generated more than 700M images in the first week after the new image-generator feature was released. The tool is now available to everyone on the platform, although free users will be limited to three images per day.

Intel and TSMC are reportedly on the verge of a deal to form a joint chipmaking venture.

Microsoft is mulling job cuts for managers and non-coders as more Silicon Valley heavyweights try to lower their “PM ratio,” the number of product managers per engineer.

YouTube shut off ad revenue for creators who make AI-generated movie trailers.

Vibe governing: Did the White House use AI to craft its tariff strategy? This is why good prompting matters.

The everything store is getting bigger. Amazon’s new agentic AI feature will let you buy products on other websites without leaving the Amazon mobile app.

Spotify rolled out AI-powered tools in its Ads Manager that let advertisers generate audio ad scripts and voiceovers.

OpenAI is fast-tracking the release of two new models, o3 and the lightweight o4-mini, within the next few weeks, while pushing back the release of GPT-5. The AI giant also announced that it will release its first open language model in years. After DeepSeek sent shockwaves around the world and Meta’s Llama hit 1 billion downloads, it’s not surprising that, as Sam Altman put it, the company wants to “rethink” its open-source approach.

Speaking of which, Meta just released Llama 4, its latest family of open-weight models: Scout, Maverick, and Behemoth. On paper, the models look technically impressive, but right after launch, Meta came under fire for allegedly gaming the benchmarks.

A blog post titled “Why I Stopped Using AI Code Editors” is making the rounds, warning developers that overreliance on AI can let their skills atrophy. While I don’t agree with the overall take, I was intrigued by the author’s comparison between coding with AI and driving with Tesla FSD: both, the author suggests, risk eroding human proficiency. Maybe it’s because I was just at Ride AI, an autonomous vehicle summit, but it got me thinking about a familiar prediction in the AV world: as the tech matures, “analog driving” won’t disappear; it’ll just become a hobbyist pursuit. The same might be true of pure coding in an AI-first future.

Semi-related to the above: Autonomous vehicles are now a marketing challenge.

If you have any questions, please get in touch. If you’re interested in sponsoring BRXND NYC, reach out and we’ll send you the details.

Thanks for reading,

Noah and Luke