The Reality (or Lack Thereof) of Agents // BRXND Dispatch vol. 72

Claude 3.7, Microsoft data centers, and NYT x AI

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of marketing and AI.

Luke here. Noah is traveling this week, so I’ll briefly remind everyone to save the date for our BRXND NYC conference on 9/18. If you’re interested in attending, you can leave your email here. If you’re interested in sponsoring or speaking, we’ve got forms for that as well.

For a taste of what to expect, you can now rewatch all the talks from our recent LA event on our YouTube channel. I actually want to spend part of today’s dispatch recapping a particularly interesting presentation about a massive topic in AI right now: the outlook for agents.

The Reality (and Lack Thereof) of Agents

If you’ve been keeping tabs on tech headlines lately, you’ve undoubtedly heard Jensen Huang and others proclaim that 2025 will be “the year of the agent.” It’s a catchy prediction that seems to be everywhere right now. The only problem is that the term “agent” continues to generate a ton of confusion, even among people who spend all day thinking about AI.

At our recent BRXND LA event, Lance Martin from LangChain offered a definition whose simplicity I appreciated: “a system that can perceive and act on the environment.” (Watch the full recording here).

Lance began by noting that the concept of AI agents that can navigate the web and perform tasks, like booking flights, has been around as long as the internet. In fact, his definition comes from a 1995 textbook.

Back then, agents were known as “softbots,” a reference to “robots,” which of course are systems that can perceive and act on the physical environment.

This led Lance to draw an interesting parallel between the evolution of robots and the evolution of agents, viewed through the lens of environmental complexity. It helps explain where we are today and where we’re headed.

Highly Structured Environments: According to Lance, what makes environments “highly structured” is the complete absence of variability. The systems don’t need to make decisions; they just execute precise, predefined actions in environments that never change.

In the physical world: Think industrial robots on assembly lines. These machines operate in meticulously controlled settings where every variable is known. The robot arm in a car factory knows exactly where to weld because that spot never changes. Everything is predetermined, from lighting conditions to the exact positioning of parts.

In the digital world: This is equivalent to Robotic Process Automation (RPA) – brittle, hard-coded workflows. These systems work, but they’re extremely limited. As Lance explained, RPA sometimes relies on “predetermined pixel coordinates” for clicking. In other words, if the UI changes even slightly, the whole system breaks. There’s zero adaptability.
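To make the brittleness concrete, here is a toy sketch in Python. It doesn't model any real RPA product; the UI dictionaries, `click_at`, and `click_by_label` are hypothetical names invented for illustration. The idea is just that a click recorded at fixed coordinates misses once a button moves, while a lookup by label still finds it.

```python
# Toy model of a UI: each button label maps to an (x, y) position.
# Every name here is hypothetical; no real RPA tool is being modeled.

def click_at(ui, x, y):
    """Return the label of the button at (x, y), or None if nothing is there."""
    for label, pos in ui.items():
        if pos == (x, y):
            return label
    return None

def click_by_label(ui, label):
    """Find the button by its label first, then click wherever it currently sits."""
    pos = ui.get(label)
    return click_at(ui, *pos) if pos is not None else None

old_ui = {"Submit": (100, 200), "Cancel": (220, 200)}
new_ui = {"Submit": (100, 240), "Cancel": (220, 240)}  # buttons moved down 40px

SUBMIT_XY = (100, 200)  # coordinates recorded against the old layout

print(click_at(old_ui, *SUBMIT_XY))      # Submit
print(click_at(new_ui, *SUBMIT_XY))      # None: the hard-coded click misses
print(click_by_label(new_ui, "Submit"))  # Submit
```

The label lookup is still a predefined path, of course. It only tolerates one kind of change, which is roughly the step from "highly structured" toward "semi-structured."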

Semi-Structured Environments: What makes a domain “semi-structured” is the balance between flexibility and constraints. The system can handle some variation but still relies on guardrails.

In the physical world: This is where we see robots that can navigate factory floors or warehouses, for example. They might be able to adapt to an unexpected obstacle or slight variations in their environment, but they still operate within significant constraints. Think of Amazon’s warehouse robots that can navigate around humans but still work within a highly controlled setting with clear markers and predictable layouts.

In the digital world: This is exactly where we are today with AI workflows and agents. Lance highlighted how current AI systems can handle some variability but still require structured APIs and predefined paths. The workflows he described (transcribing meetings, summarizing content, updating databases, generating reports) are powerful but still operate within a framework of known possibilities.

Unstructured Environments: What defines these “unstructured” environments is the absence of guardrails and the presence of unlimited variability. The system needs to understand context and intention at a much deeper level.

In the physical world: This is the domain of those humanoid robots that Elon Musk and others envision: machines that can navigate and operate in the same chaotic, unpredictable environments that humans do. Your living room, a crowded street, a construction site: these are unstructured environments where variables constantly change in unpredictable ways.

In the digital world: This is where we’re heading with tools like OpenAI’s Operator. Lance pointed out that the next evolution is digital agents that interact with computers exactly as humans do, using a mouse and keyboard, navigating changing UIs, and adapting to new applications without pre-built integrations. Instead of relying on APIs, these systems would observe screen pixels and interact with them directly, just as we do.
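For readers who think in code, the "perceive and act" loop behind this idea can be sketched in a few lines of Python. This is a toy under heavy assumptions, not how Operator or any real product works: `ToyScreen`, `policy`, and `run_agent` are hypothetical names, the "screen" is a hand-written dictionary rather than pixels, and the "policy" is a trivial rule standing in for a model.

```python
# A toy perceive-act loop. The screen and policy are hypothetical stand-ins:
# a real agent would read pixels and ask a model what to do next.

class ToyScreen:
    """A fake app made of pages; the agent sees only the current page's buttons."""

    def __init__(self):
        # Each page maps a button label to the page that button leads to.
        self.pages = {
            "home": {"Search flights": "results"},
            "results": {"Select cheapest": "checkout"},
            "checkout": {"Confirm booking": "done"},
            "done": {},
        }
        self.current = "home"

    def observe(self):
        return {"page": self.current, "buttons": list(self.pages[self.current])}

    def click(self, button):
        self.current = self.pages[self.current][button]

def policy(observation):
    """Stand-in for a model: pick the first available button, if any."""
    buttons = observation["buttons"]
    return buttons[0] if buttons else None

def run_agent(screen, max_steps=10):
    history = []
    for _ in range(max_steps):
        obs = screen.observe()   # perceive
        action = policy(obs)     # decide
        if action is None:       # nothing left to click, so stop
            break
        screen.click(action)     # act
        history.append(action)
    return history

screen = ToyScreen()
print(run_agent(screen))  # ['Search flights', 'Select cheapest', 'Confirm booking']
print(screen.current)     # done
```

Note that the loop never hard-codes a page or a button. It only reacts to whatever the screen currently shows, which is the property that matters as environments get less structured.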

Lance suggested we’re at the beginning of a 10-year arc. While today’s workflows are already powerful, we’re moving toward a world where digital agents will be able to replicate virtually anything we do with a computer.

The big question for companies becomes: at what point will you actually allow an “operator-style” agent to handle customer support or manage your social media?

What Else Caught My Eye This Week

A new Age of Reason(ing) is dawning. Following the release of Grok 3 last week and Claude 3.7 Sonnet yesterday, nearly all the major AI labs now offer reasoning models: a type of LLM that works through a “chain of thought” process to get more accurate results. Anthropic’s twist on reasoning, a “hybrid” model that can answer in real time or provide more thought-out responses, is unique, but may not be for long. Sam Altman has already said that OpenAI will adopt a similar approach for GPT-5. What we’re witnessing, according to AI watcher Charlie Guo, is the convergence of LLMs around a handful of core features and products, such as web search, browser automation, and software development. The near-term outcome will likely be more emphasis on product than on research.

Anthropic’s other big release, an agentic coding tool called Claude Code, is being pitched as “an active collaborator that can search and read code, edit files, write and run tests, commit and push code to GitHub, and use command line tools.” (Related: a16z on the explosion of new products that allow users to build apps and websites with AI, aka “vibe coding.”)

OpenAI just announced Deep Research (which previously cost $200/month) will be rolled out to ChatGPT Plus, Team, Edu, and Enterprise users.

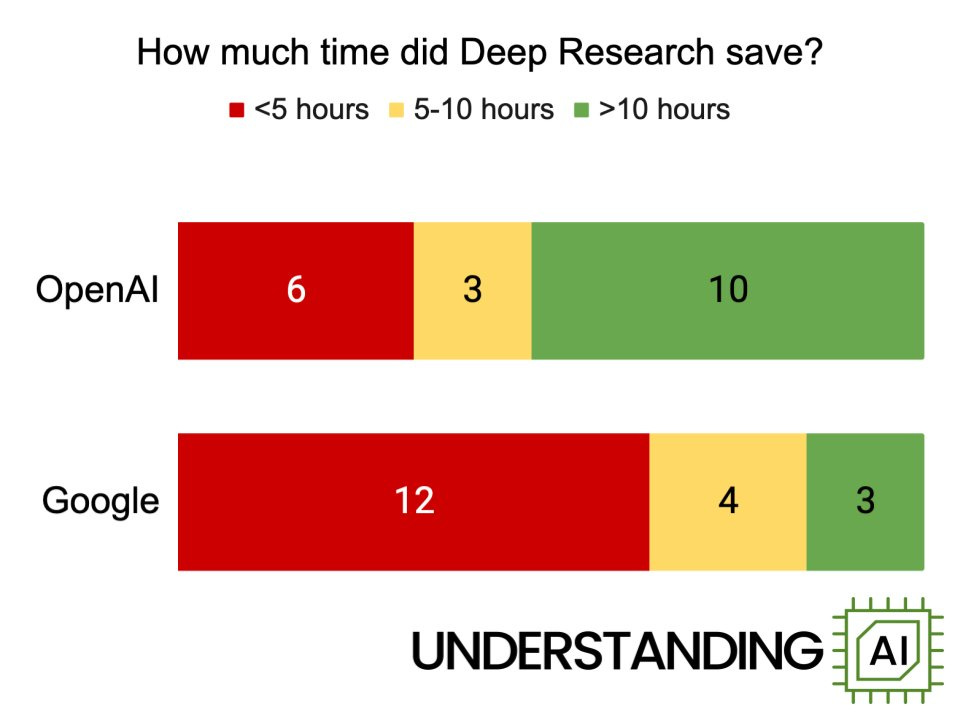

Meet the Pepsi Challenge for Deep Research. In a blind “taste test,” a panel of subject-matter experts saved more time using OpenAI’s research assistant than Google’s version.

The NYT is greenlighting the use of AI to write social posts, SEO headlines, and some code. While you can imagine this sort of effort encountering backlash, it’s striking to see the largest legacy publisher acknowledge that AI can have a role in the newsroom.

After pledging to put $80B into computing capacity, Microsoft is now backpedaling on some of its data center leases, raising questions on Wall Street about whether AI capex (and, by extension, demand) is still going vertical.

Author Steven Johnson revisits Herbert Simon’s ground-breaking 1971 lecture on the “attention economy,” framing LLMs as “information condensers” for our era of data abundance.

How OpenAI’s first Super Bowl ad came together.

That’s it for now. Thanks for reading, subscribing, and supporting. As always, if you have questions or want to chat, please be in touch.

Thanks,

Luke
