Inverting the Adoption Curve // BRXND Dispatch vol. 79

Plus, don't forget to sign up for the BRXND NYC conference on 9/18.

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of marketing and AI. We’re hosting our next BRXND NYC conference on September 18, 2025, and currently looking for sponsors and speakers. If you’re interested in attending, please add your name to the wait list. As space is limited, we’re prioritizing attendees from brands first. If you work at an agency, just bring a client along—please contact us if you want to arrange this.

What Caught My Eye This Week (Luke)

This week, Andrej Karpathy, popularizer of the term “vibe coding” and all-around giant of the AI world, wrote an interesting post on X about the spread of AI. In it, he makes the observation that LLMs have inverted the typical technology adoption curve. Almost every transformative technology in history has followed a top-down diffusion path, starting with governments and military, moving to corporations, and only later reaching regular people. But with LLMs, we’re seeing the opposite: Currently, it’s ordinary people who are disproportionately reaping the benefits.

This post reminded me of the hallway chatter at the BRXND LA conference earlier this year. What we heard from executives at large organizations is that they’re often struggling with how to implement AI effectively, while their employees are already using ChatGPT daily. This creates tension where the people inside the company often have more hands-on experience with the technology than the organization itself.

Why is this happening? It’s easy to blame bureaucracy, and that’s certainly a factor, but large organizations often have good reasons for moving cautiously. The more compelling explanation comes down to a question of relative impact.

Karpathy argues that, for a corporation, AI might make existing workflows and processes only marginally more efficient: “[A]n organization’s unique superpower is the ability to concentrate diverse expertise into a single entity by employing engineers, researchers, analysts, lawyers, marketers, etc. While LLMs can certainly make these experts more efficient individually (e.g. drafting initial legal clauses, generating boilerplate code, etc.), the improvement to the organization takes the form of becoming a bit better at the things it could already do.”

But for individuals, AI allows them to do things they simply couldn’t do before, whether that’s coding, writing, analyzing data, or understanding complex research. The multiplier effect is much more dramatic.

Where I think this phenomenon gets particularly interesting is around this idea of “quasi-expert knowledge” across domains. Karpathy writes that the value of AI isn’t that it’s the world’s best copywriter or strategist—it’s that it’s a pretty good copywriter AND strategist AND analyst AND researcher. That versatility is transformative for individuals, but more incremental for organizations that already have specialists in each domain.

My Reaction (Noah)

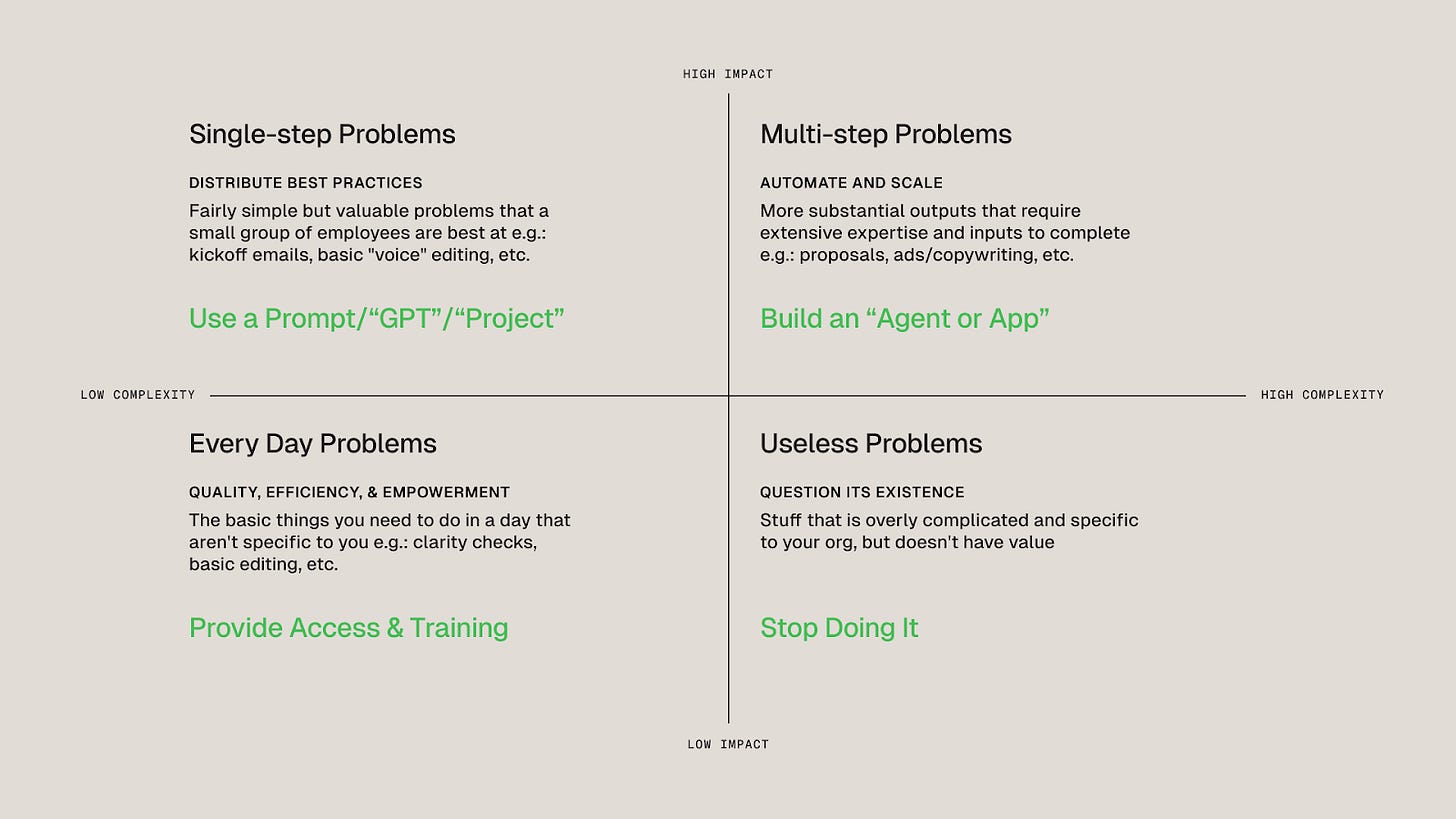

One thing I’d say is that while organizations do have a lot of those specialists, they’re not widely distributed. One use of AI that I think is massively underhyped is its ability to “raise all boats” in an organization by distributing the best skills of a few with specific expertise to many others. This is how I always thought about the top left box in this 2x2 I made. It represents the ability to distribute best practices in the form of GPTs/Gems/Apps/Projects/whatever you want to call them to the broader organization. If you have someone who is particularly good at writing kickoff documents, being able to distribute a synthetic version of them reading through a kickoff plan has a tremendous amount of value.

The other thing Luke’s thoughts made me remember is this super interesting comment from the Dwarkesh Patel podcast episode on the AI 2027 project:

Yeah. I had this interesting experience yesterday. We were having lunch with this senior AI researcher, probably makes on the order of millions a month or something, and we were asking him, “how much are the AIs helping you?” And he said, “in domains which I understand well, and it’s closer to autocomplete but more intense, there it’s maybe saving me four to eight hours a week.”

But then he says, “in domains which I’m less familiar with, if I need to go wrangle up some hardware library or make some modification to the kernel or whatever, where I know less, that saves me on the order of 24 hours a week.” Now, with current models. What I found really surprising is that the help is bigger where it’s less like autocomplete and more like a novel contribution. It’s like a more significant productivity improvement there.

The real accelerator of these models is the ability to amplify extraordinary people by many times their current capabilities.

What (Else) Caught My Eye This Week (Luke)

OpenAI just released three new developer-centric, API-only models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. Each of the three models handles 1-million-token contexts while delivering typical query costs 26% lower than GPT-4o. Here’s everything you need to know.

Sam Altman at TED: OpenAI’s user base doubled in the past few weeks. “10% of the world now uses our systems a lot.”

Here’s an in-depth breakdown of what Trump’s new tariffs mean for AI infrastructure. With import fees as high as 145% on Chinese products threatening to disrupt global trade networks, tech giants are already finding loopholes, such as routing semiconductors through Mexico and Canada under USMCA.

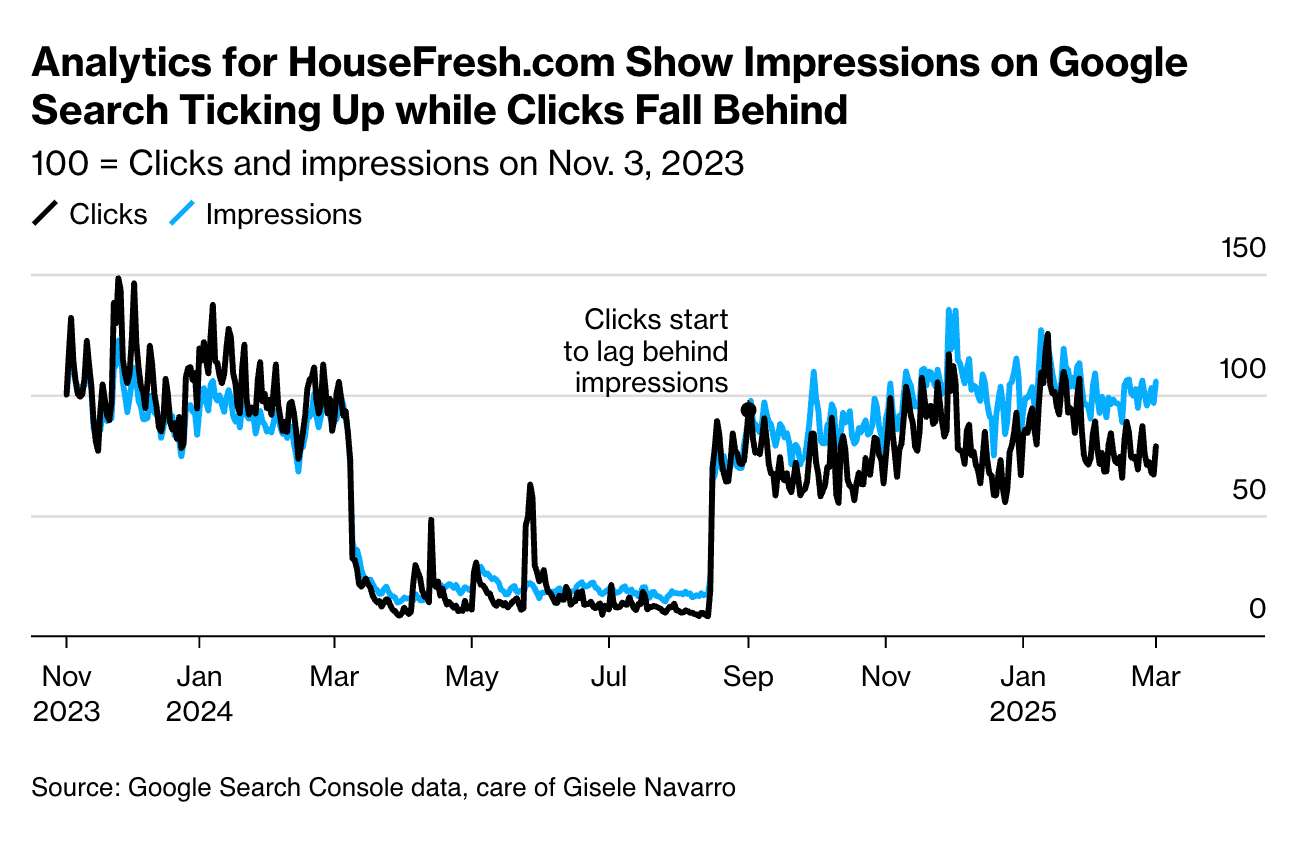

Fewer clicks, but more impressions. Publishers say Google’s AI Overviews, the AI-generated summaries that appear above traditional Google Search results, are killing their traffic, even though in some cases they are exposing more people to their content.

Snap is rolling out Sponsored AI Lenses for brands.

CAC $0: AI code editor Cursor hit 1 million DAUs without spending a single dollar on marketing.

Why you need to be creating permanent instruction documents for the AI within your coding projects: “Imagine you’re managing several projects, each with a brilliant developer assigned. Here’s the catch: every morning, all your developers wake up with complete amnesia. They forget your coding conventions, project architecture, yesterday’s discussions, and how their work connects with other projects… What would you do to stop this endless cycle of repetition? You would build systems!”

Total recall: ChatGPT can now reference all your past conversations, a massive potential improvement for personalization and iteration. In addition, Elon Musk’s xAI rolled out its own memory feature this week.

YouTube is expanding its “likeness” detection technology, which detects AI fakes. Meanwhile, the AI deepfake bill NO FAKES has been reintroduced in Congress, with support from OpenAI, YouTube, and more.

GitHub has rolled out “premium requests” for Copilot, introducing rate limits for users accessing advanced AI capabilities. While subscribers retain unlimited access to the standard model (OpenAI’s GPT-4o), interactions with more powerful models like Anthropic’s Claude 3.7 Sonnet will now face usage restrictions.

Complementing its suite of design tools for marketers, Canva just introduced an AI code generator similar to v0, Cursor, and Bolt.

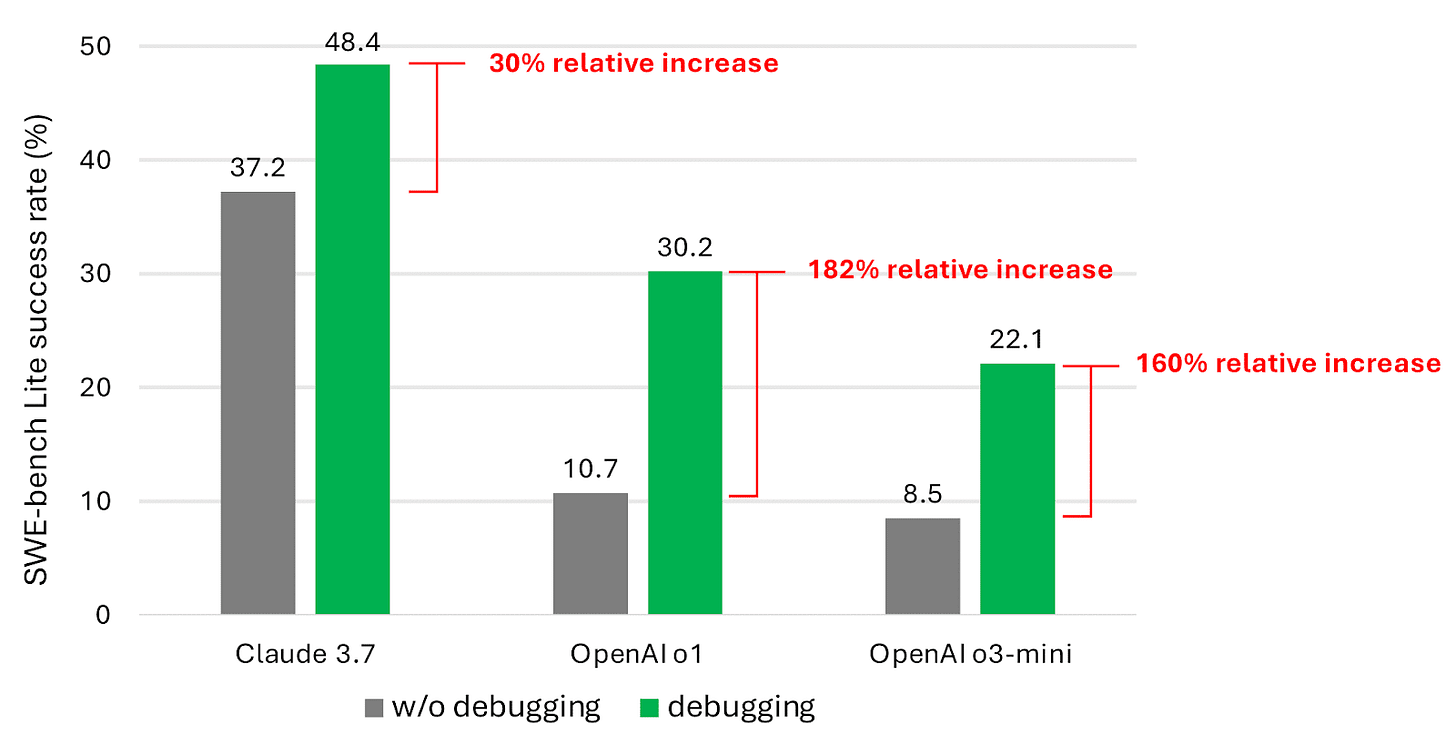

Microsoft Research released a study showing that even the most advanced AI models still struggle with everyday debugging tasks. In tests using nine different LLMs on 300 debugging challenges, the best-performing model solved fewer than half of the issues. This is why linting is so important.

If you have any questions, please be in touch. If you are interested in sponsoring BRXND NYC, reach out, and we’ll send you the details.

Thanks for reading,

Noah and Luke