LLMs Are Killing Confusion Commerce // BRXND Dispatch vol 98

The (possible) end of 14-click shopping, AI breaks the "more money makes worse software" rule, and early Gemini 3 notes

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of marketing and AI.

Happy Tuesday! Thanksgiving week means an early Dispatch. Here’s what’s been making the rounds in our Slack, plus some Gemini 3 experiments.

What caught our eye this week

MoffettNathanson analyst Michael Morton breaks down why LLMs will reshape e-commerce. He splits e-commerce into “push” (Instagram or TikTok shows you a thing) and “pull” (you search for a thing). Push is fine; pull is the problem. Morton actually tested this: searching “best running shoes for flat feet” on Google returned six ads, only one of which was a correct answer. ChatGPT gets it right 60-80% of the time. Right now Amazon profits from confusion, with people clicking 14+ products before buying. But what happens when ChatGPT gives us the answer in one go?

Martin Casado argues that AI fundamentally changes software companies’ relationship to capital. The “bitter lesson” (that compute beats clever algorithms) means small teams can now turn massive funding into working products faster than before. This breaks the old software rule that throwing money at projects makes them worse. Traditional software faced talent constraints and complexity limits; AI projects face capital and GPU constraints. As a result, AI companies can productively deploy huge amounts of capital early: they are taking far more capital, using far smaller teams, and generating historic growth rates, along with burn rates that would have destroyed traditional software startups.

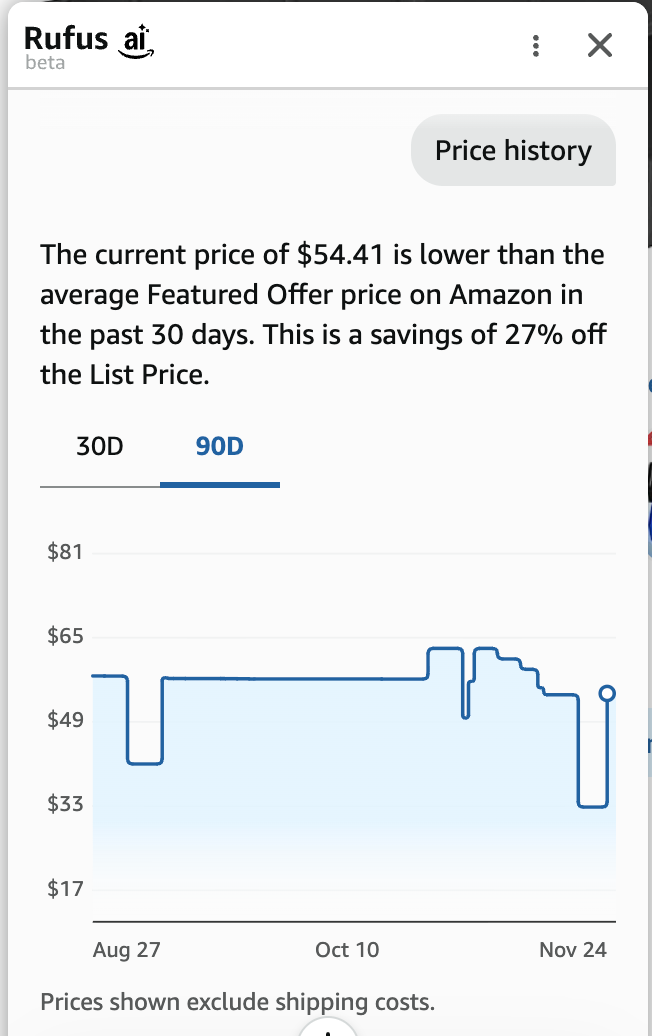

Amazon’s Rufus AI assistant now shows 30- and 90-day price history on any product: just ask “show me price history.” You can also set price alerts to get notified when something hits your target price, and Prime members can enable auto-buy to have Rufus purchase it automatically when the price drops. This is Amazon acknowledging that price transparency matters, even if it cuts into its dynamic pricing advantage. Rufus also handles handwritten grocery lists now (snap a photo, it adds items to cart), searches across other retailers with “Buy for Me” buttons, and has account memory so it remembers if you, for example, have a golden retriever that sheds.

Prompt engineering is becoming an artistic practice, according to Danny Oppenheimer. His piece in the MIT Press argues that crafting prompts is less about precision and more about interpretation, collaboration, and aesthetic judgment. As AI systems get more capable, the person writing the prompt becomes the curator and director, not the technician. We’re defining a new creative medium where the artist’s tool is language itself and the canvas is probabilistic.

Philipp Schmid breaks down what works when prompting Google’s newly released Gemini 3 model. Gemini 3 shipped with significant improvements over previous versions, and early testing shows it handles prompts differently than other models. The advice: be specific, use examples, and structure requests with clear delimiters. Gemini 3 also responds better to a conversational tone than to rigid instruction formats. If you’re building on Google’s models, this guide will help you get better performance without fine-tuning.
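For anyone who wants to see what “specific, with examples, and clearly delimited” might look like in practice, here is a minimal sketch in plain Python. The XML-style tags, the `build_prompt` helper, and the sample task are our own illustrative assumptions, not something taken from the guide; any consistent delimiter would serve the same purpose. It only assembles the prompt text and does not call any model API.

```python
def build_prompt(task: str, examples: list[tuple[str, str]], context: str) -> str:
    """Assemble a prompt with a specific task, worked examples, and delimited sections.

    The tag names (<task>, <examples>, <context>) are hypothetical choices
    made for illustration; they are not prescribed by any guide.
    """
    example_lines = "\n".join(
        f"Input: {inp}\nOutput: {out}" for inp, out in examples
    )
    return (
        f"<task>\n{task}\n</task>\n\n"
        f"<examples>\n{example_lines}\n</examples>\n\n"
        f"<context>\n{context}\n</context>"
    )


# Hypothetical sample content, written in a conversational tone.
prompt = build_prompt(
    task="Could you write a one-sentence product tagline in a friendly voice?",
    examples=[("trail running shoes", "Grip the trail, skip the slip.")],
    context="Product: running shoes designed for flat feet.",
)
print(prompt)
```

The resulting string can be pasted into a chat window or passed to whichever client library you already use.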

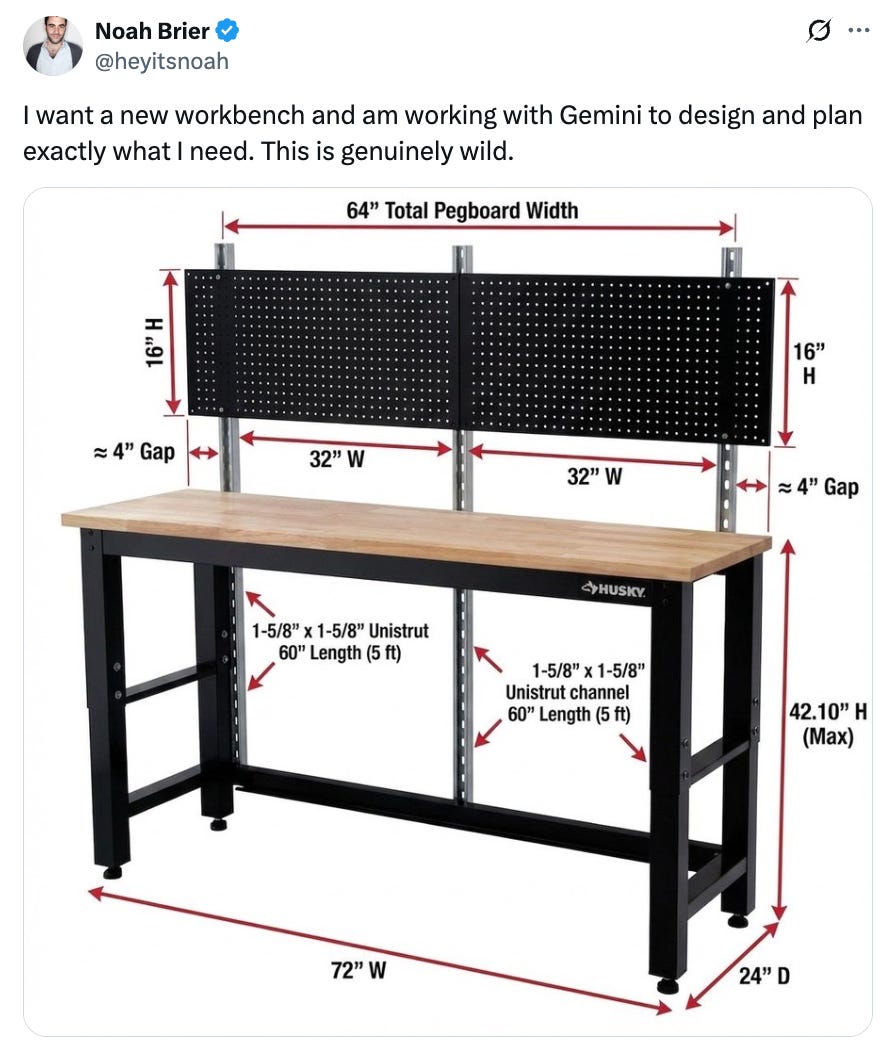

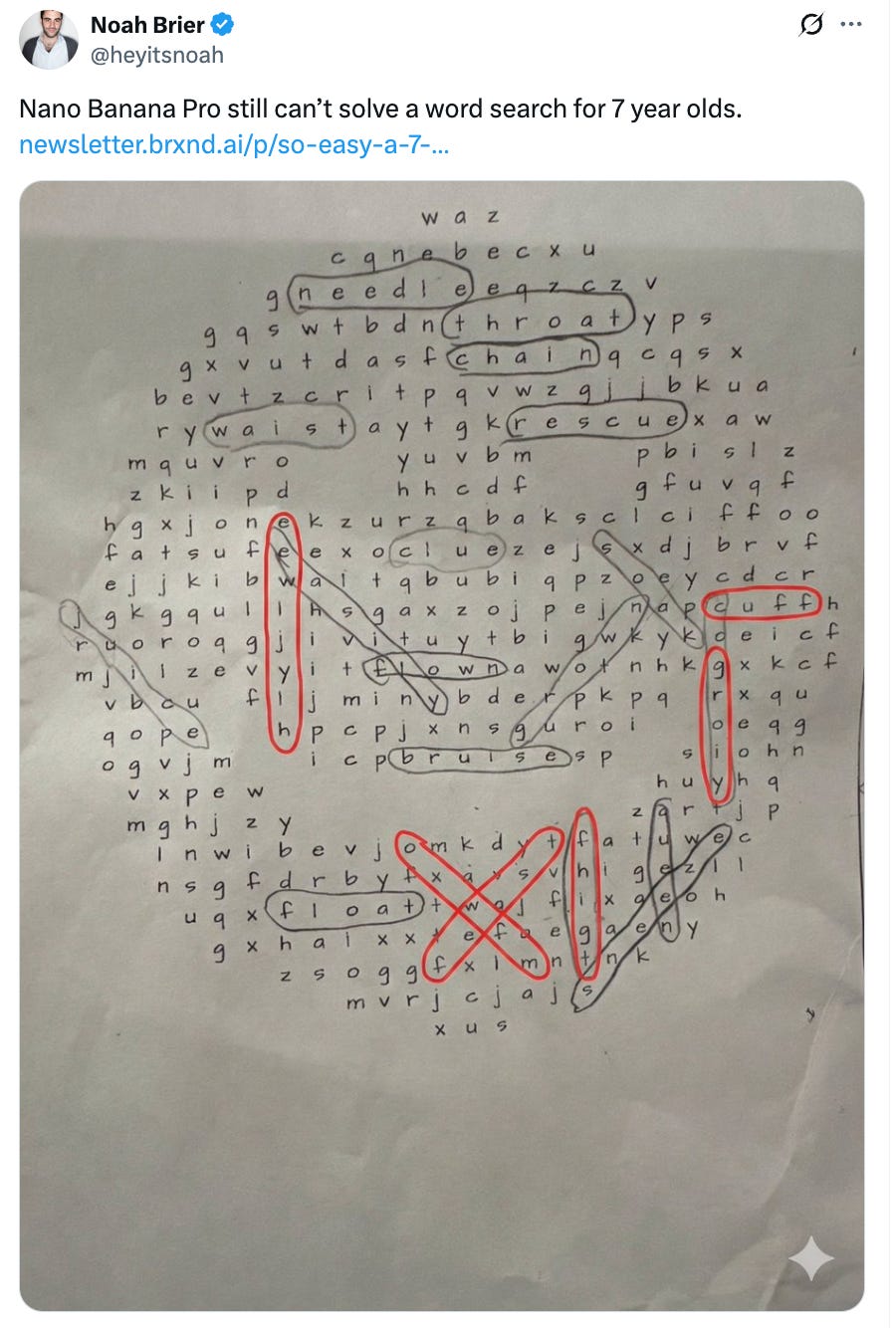

Speaking of Gemini 3, we’ve been playing around with it a lot this week:

Gemini 3 is definitely worth trying, but the jury is still out on whether it’s a step change or just another example of standard (albeit exponential) progress in AI development.

Happy Thanksgiving! If you have any questions, please get in touch. As always, thanks for reading.

Noah and Claire