The AI Usage Gap // BRXND Dispatch vol 89

Plus, answering your anonymous questions!

You’re getting this email as a subscriber to the BRXND Dispatch, a newsletter at the intersection of marketing and AI. We’re hosting our next BRXND NYC conference on September 18, 2025, and are currently looking for sponsors and speakers. If you’re interested in attending, please add your name to the waitlist. We plan to open up tickets publicly soon.

What's On My Mind This Week

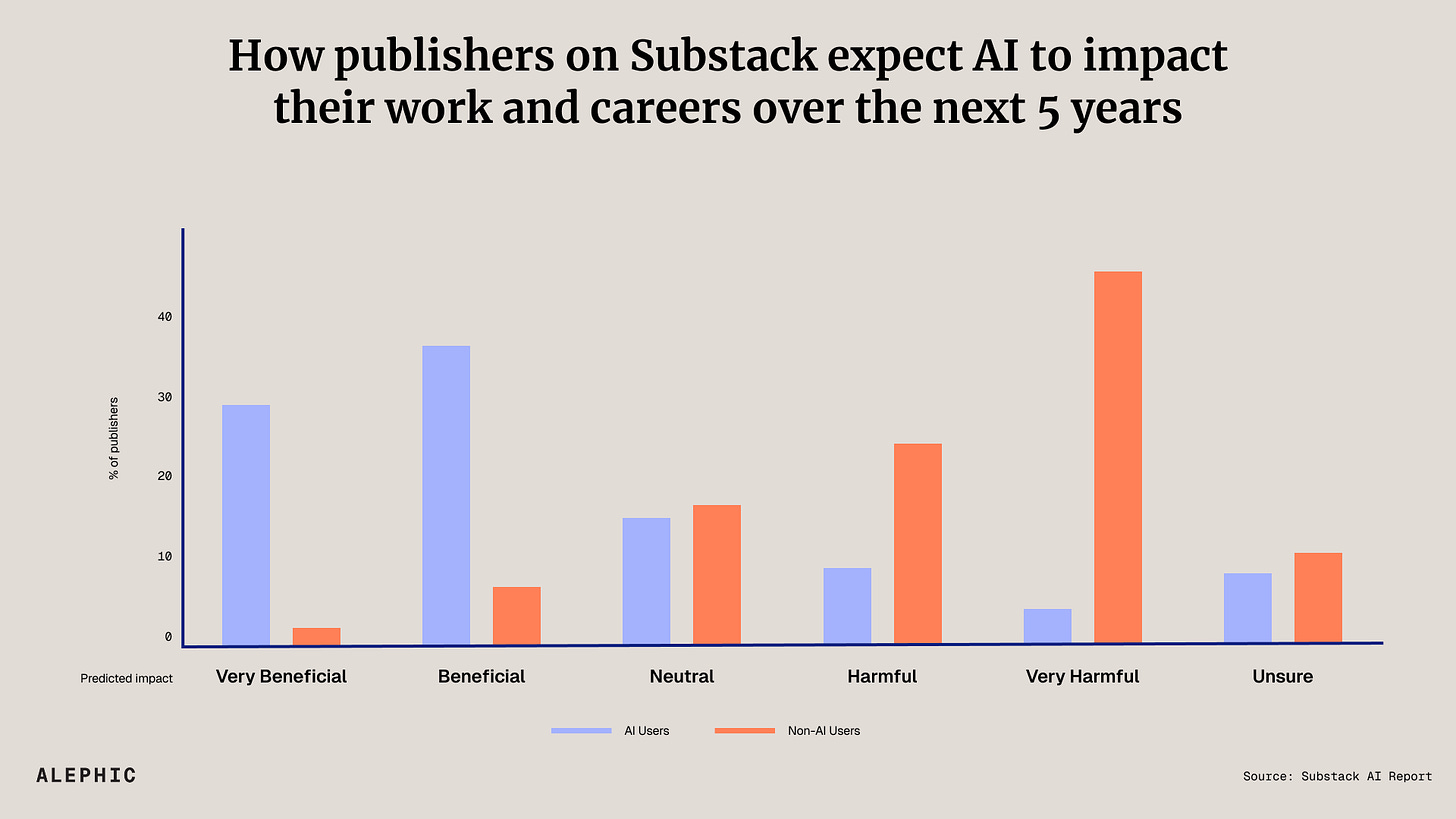

Noah here. This might be the best chart I’ve seen yet in the world of AI.

It’s from Substack’s AI Report, and while it is focused on publishers, the message is exactly the same for the corporate world. Namely, we are witnessing an AI divide. The thing is, this isn’t about prompting skills or ChatGPT Pro subscriptions; it’s a divide between those who have taken the time to play with AI and those who haven’t.

I wrote about this a bit in a piece titled “The Evenly Distributed Blindfold”:

I find myself having these surreal conversations where smart, accomplished people ask me when AI might be able to do things it’s already doing. This isn’t theoretical—it’s happening in boardrooms and coffee shops right now. A CMO or creative director will express genuine skepticism about whether AI can produce coherent research or quality copy. So I pull out my phone, open ChatGPT, and show them. They’re inevitably surprised, despite this technology being, literally, free.

We typically invoke William Gibson’s line here: “The future is already here—it’s just not evenly distributed.” But with generative AI, distribution isn’t the problem. Anyone with a phone can access tools more capable than most entry-level knowledge workers. ChatGPT reached 100 million monthly users just two months after launch, and OpenAI now reports over a billion registered users globally. These aren’t niche statistics—they’re mainstream adoption curves.

I wrote that in May of this year, but I could have written it in 2024 or 2023. In fact, here’s something I did write in 2023:

While much of the current AI conversation is future-based as well, part of what makes it so much more fun and exciting is that there are immediate and real applications right now. I built my little brand collab experiment using off-the-shelf AI APIs, and, at this point, a day doesn’t go by that I don’t find some new real use case for this technology. For example, just this week, I needed to do some simple URL classification—guess what type of page it is based on the URL structure—and I was able to do fine-tuning on GPT3 and use the new model to process 100,000 URLs. The accuracy, by my estimation, is well above 95%, and it took about 20 minutes. That’s crazy!

I continue to believe a few things about AI:

It’s weird: this is the most counterintuitive technology of our lifetimes in the most literal sense of the word. We have built up literal years of intuition for deterministic computing, and now we have this strange probabilistic thing dropped in our laps.

It’s not hard: while it may be weird, it’s not complicated to learn. I continue to believe in my bicycle analogy—you start a little wobbly, fall off a few times, and then forget that it was ever complicated.

It’s just the beginning: I have another article in the hopper about how I think executives and companies are fundamentally wrong about where in the lifecycle AI is and why that matters so much. I believe we’re in year one or two of a 10-15 year transformation. If that’s true, we shouldn’t be treating this as a mature technology with a bunch of AI bureaucracy surrounding it, but rather be focused on getting people hands-on experience so they can learn to ride the bike.

It’s underhyped: I know this sounds crazy given the amount of hype, but when I continue to walk into rooms with executives who clearly have fewer than five hours of hands-on-keyboard time with AI, I can’t help but believe it’s actually underhyped. What’s so amazing about this technology is that it’s the ultimate horizontal technology, and therefore any use case can apply. I often do something that I’m fairly certain I’m the first person to try with AI. You can only get there with hands on keyboards.

If you’ve tried all this and still don’t think AI works for you, I’d love to hear about it. In all my travels and conversations over the last four years, I’ve yet to meet that person. If you’re an exec trying to figure out why your org isn’t adopting AI, look at that chart and look back at yourself: have you put in the hours to understand it? If not, that’s where you should start.

There’s a great quote from Andy Grove in Only the Paranoid Survive about how to treat a strategic inflection point:

Resolution of strategic dissonance does not come in the form of a figurative light bulb going on. It comes through experimentation. Loosen up the level of control that your organization normally is accustomed to. Let people try different techniques, review different products, exploit different sales channels and go after different customers. Much as management has been devoted to making and keeping order in the company, at times like this they must become more tolerant of the new and the different. Only stepping out of the old ruts will bring new insights.

The operating phrase should be: “Let chaos reign!”

What’s so rare about this particular strategic inflection point is that it doesn’t require millions in capex (by you at least); it just requires a bit of initiative to download ChatGPT on your phone and make yourself use it. I’m a firm believer that the train hasn’t left the station on AI and everyone can still catch up, but I am also becoming less and less patient with the excuses from people who haven’t spent the time to get familiar.

Anonymous AI Question of the Week:

As a reminder, we started an Anonymous AI Question submission form so you can ask all the questions you’re too afraid to ask. Submit yours now! Here’s our first question, thanks to “anonymous” for the submission:

"What are the best prompts for preventing AI from being “lazy”? Fear? Rewards? Thoroughness?" - Anonymous

I like that this was our first question, in part because I don't know the answer (and, to my knowledge, no one else does either). I suspect it's very context-dependent. For instance, some experiments show that LLMs write longer, more detailed answers when the prompt teases an imaginary reward (“I’ll tip you $1,000 if this is perfect”) or when you trick them out of their reported December “winter-break slump” by telling the model it’s May instead. With that said, one simple thing is just to tell the model what you're thinking (like your significant other, it can't read your mind). Also, giving examples of what you are looking for (few-shot prompting) never hurts (be a token maximalist!). A related best practice is to let the model know that it's OK to not know the answer, and that it should say so clearly when that's the case. Also, just use better models! Too many people run their heads into a wall trying to get 4o to do something when o3 is waiting for them (hopefully one day OpenAI will figure out how to spare you from needing to know the difference, but for now that's not the case).
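For the code-inclined, here is a minimal Python sketch of those three habits: say what you want, show a few examples, and give the model permission to not know. It only assembles a chat-style message list and does not call any API. The `build_messages` helper, the `UNSURE_NOTE` wording, and the example URLs are all made up for illustration, and the `role`/`content` layout is an assumption based on the common chat format, so adjust it to whatever your provider expects.

```python
from typing import Dict, List, Tuple

# Hypothetical wording: gives the model explicit permission to not know.
UNSURE_NOTE = "If you are not sure of the answer, say so plainly instead of guessing."


def build_messages(
    instructions: str,
    examples: List[Tuple[str, str]],
    question: str,
) -> List[Dict[str, str]]:
    """Assemble instructions, few-shot examples, and the real question, in that order."""
    # 1. Tell the model what you are thinking, plus the "OK to not know" note.
    messages = [
        {"role": "system", "content": f"{instructions.strip()}\n\n{UNSURE_NOTE}"}
    ]
    # 2. Few-shot prompting: each example is a user turn followed by the ideal reply.
    for sample_input, sample_output in examples:
        messages.append({"role": "user", "content": sample_input})
        messages.append({"role": "assistant", "content": sample_output})
    # 3. The actual question always comes last.
    messages.append({"role": "user", "content": question})
    return messages


if __name__ == "__main__":
    # Illustrative task only: guess the page type from the URL structure.
    demo = build_messages(
        instructions="Classify each URL by page type. Reply with the page type only.",
        examples=[
            ("https://example.com/blog/2023/05/hello-world", "blog post"),
            ("https://example.com/products/blue-widget", "product page"),
        ],
        question="https://example.com/careers/senior-editor",
    )
    for message in demo:
        print(f"{message['role']}: {message['content']}")
```

More examples cost more tokens, which is exactly the point: context is usually cheaper than a vague answer.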

If you have any questions, please be in touch. If you are interested in sponsoring BRXND NYC, reach out, and we’ll send you the details.

Thanks for reading,

Noah and Claire